From Editing to Mastering: AI Research Insights at ISMIR 2025

Sony AI

Events

September 29, 2025

At ISMIR 2025 in Daejeon, South Korea, Sony AI and its collaborators presented four new research projects that explore how AI can support music creators and producers. From editing existing tracks with words and refining mastering workflows to understanding the role of audio effects and making music identification more robust in noisy environments, these projects demonstrate AI’s potential to support creativity and creators.

Rather than replacing artistry, each study shows where technology might reduce technical barriers and give creators and rights holders more precise and flexible tools to shape, protect, and share their work.

Editing Music with Words

Instruct-MusicGen: Unlocking Text-To-Music Editing For Music Language Models Via Instruction Tuning

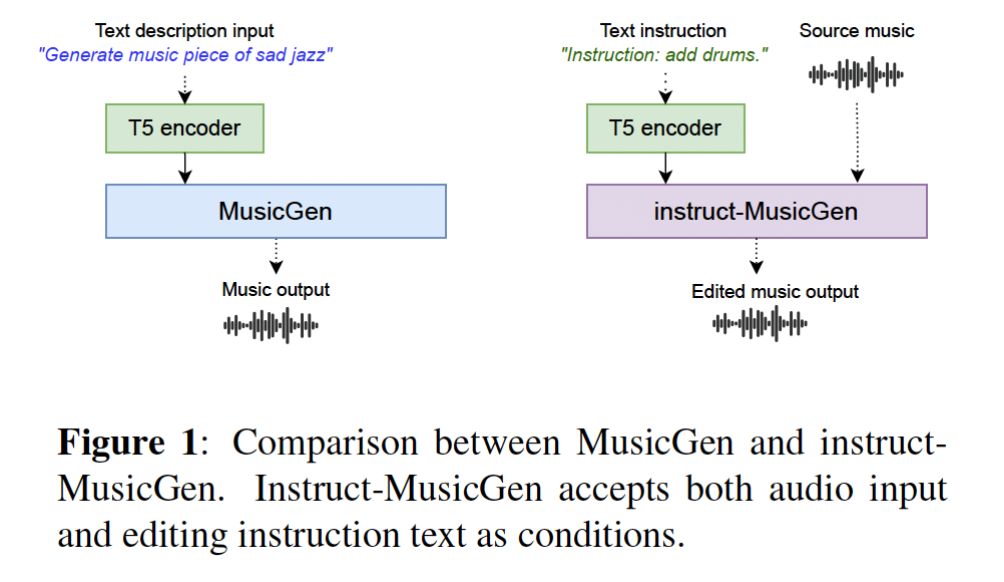

Music creation rarely ends with the first draft. Artists and producers shape and reshape their work as part of the creative process. They add instruments, remove parts, and refine the mix to bring their vision to life. While models such as MusicGen can generate music from text prompts, editing existing tracks with words has remained out of reach.

That’s where Instruct-MusicGen comes in. Developed by a team from Sony AI, Queen Mary University of London, and Music X Lab at MBZUAI, this new research introduces a flexible, lightweight approach to text-to-music editing.

The Why

Previous attempts at text-based music editing faced two major hurdles:

Training costs: Specialized editing models required massive datasets and long training times, making them resource-intensive and hard to scale. Compounding this, most music generation models have relied on private “licensed” datasets whose actual terms or scope are often unclear. For example, the MusicGen paper cites 20K hours of licensed music drawn from internal and commercial libraries such as Shutterstock and Pond5. Our work builds on the publicly available MusicGen model and synthesizes an instructional dataset from the Slakh2100 dataset, released under a Creative Commons 4.0 license. This research is not for commercial use.

Audio fidelity: Approaches that leaned on large language models could interpret instructions but often produced edits with degraded sound quality.

For creators, this meant limited options: either overly rigid systems or outputs that lacked the polish needed for real production.

Enter Instruct-MusicGen

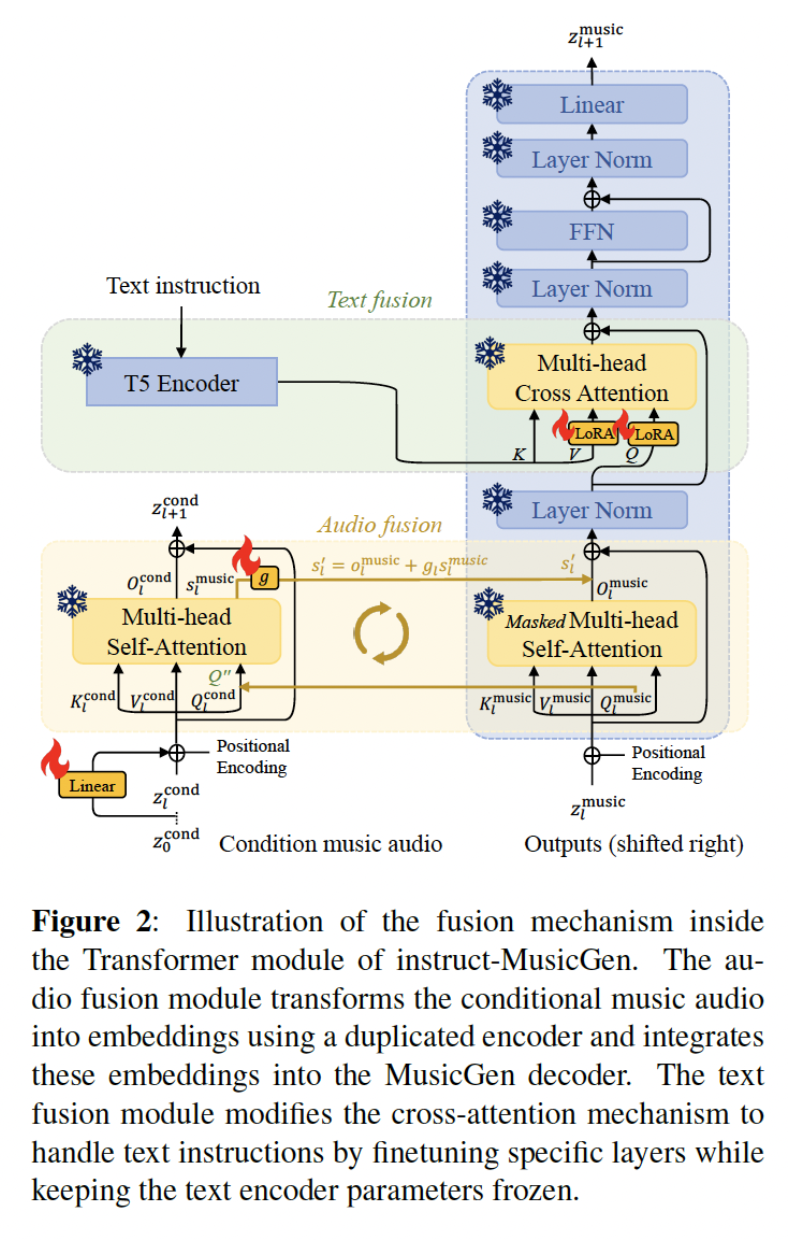

Instruct-MusicGen enhances the original MusicGen model by adding two lightweight modules:

Audio Fusion Module: allows the model to condition on an existing track.

Text Fusion Module: enables the model to follow specific instructions like “add guitar” or “remove drums.”

By combining these modules, the model can take both audio and text inputs and produce edited music.

The efficiency gains are striking: the modules add only about 8 percent more parameters, and fine-tuning takes just 5,000 training steps, less than 1 percent of the cost of training a new model from scratch.

Why It Matters

This means creators could potentially edit generated music more quickly and intuitively. Instead of wrestling with complex tools, they can describe what they want in natural language.

- Flexible editing: Add, remove, or separate stems using a single model.

- Efficiency: Lightweight training makes the model scalable and adaptable.

- Quality: Evaluations show state-of-the-art results on both synthetic datasets (Slakh2100) and real-world tracks (MoisesDB).
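The three editing operations above map neatly onto multitrack stems. As a rough illustration of how (input, instruction, target) training examples could be synthesized from a stem-based corpus such as Slakh2100, here is a minimal Python sketch. The function `make_edit_triplet`, the instruction wording, and the plain summing of stems into mixes are hypothetical simplifications, not the authors' data pipeline.

```python
import numpy as np


def make_edit_triplet(stems, op, target):
    """Build one hypothetical (input_audio, instruction, target_audio) example.

    stems:  dict mapping instrument name -> 1-D float array (equal lengths).
    op:     "add", "remove", or "extract".
    target: the instrument the instruction refers to.
    """
    others = [name for name in sorted(stems) if name != target]
    # Mix of every stem except the target (simple summation, no gain staging)
    without_target = sum((stems[name] for name in others), np.zeros_like(stems[target]))
    with_target = without_target + stems[target]

    if op == "add":
        return without_target, f"add {target}", with_target
    if op == "remove":
        return with_target, f"remove {target}", without_target
    if op == "extract":
        return with_target, f"extract {target}", stems[target]
    raise ValueError(f"unknown op: {op}")


# Usage with random "stems" standing in for one second of 16 kHz audio each
rng = np.random.default_rng(0)
stems = {name: rng.standard_normal(16000) for name in ["bass", "drums", "guitar"]}
mix_in, instruction, mix_out = make_edit_triplet(stems, "add", "guitar")
print(instruction)  # add guitar
```

In a real pipeline the mixes would be rendered with realistic levels and the audio would be tokenized for the language model; the sketch only shows how one set of stems yields several instruction pairs.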

In listening tests, participants rated Instruct-MusicGen higher than baselines like AUDIT and M2UGen for both instruction adherence and audio quality.

Breaking New Ground

This research advances three new ideas:

- Instruction tuning for music: adapting a proven technique from text/image AI into the music domain.

- Lightweight design: minimal parameter increase, fast training, and strong results.

- Creator empowerment: a tool that reduces technical friction and expands creative possibilities.

Toward the Future of Music Editing

Instruct-MusicGen reflects how we are exploring the ways AI can shape the creative process. It gives creators new ways to experiment, refine, and collaborate with technology, while leaving artistic choices firmly in their hands.

The project was led by Yixiao Zhang (Queen Mary University of London / Sony AI internship), Yukara Ikemiya, Woosung Choi, Naoki Murata, Marco A. Martínez-Ramírez, Wei-Hsiang Liao, Yuki Mitsufuji (Sony AI), Liwei Lin, Gus Xia (Music X Lab, MBZUAI), and Simon Dixon (Queen Mary University of London).

The code, model weights, and demos are available here: https://github.com/ldzhangyx/instruct-musicgen

Fine-Tuning the Final Touch

ITO-Master: Inference-Time Optimization For Audio Effects Modeling Of Music Mastering Processors

Mastering is often called the final polish in music production. It’s the stage where tracks gain balance, loudness, and clarity before release. This step requires expert engineers with a finely tuned ear.

AI cannot replace that artistry, nor should it. Instead, our goal at Sony AI is to augment the process, researching how to give creators new ways to explore, refine, and adapt their sound. Automated tools can handle the repetitive technical setup, while musicians and engineers stay in control of the creative choices.

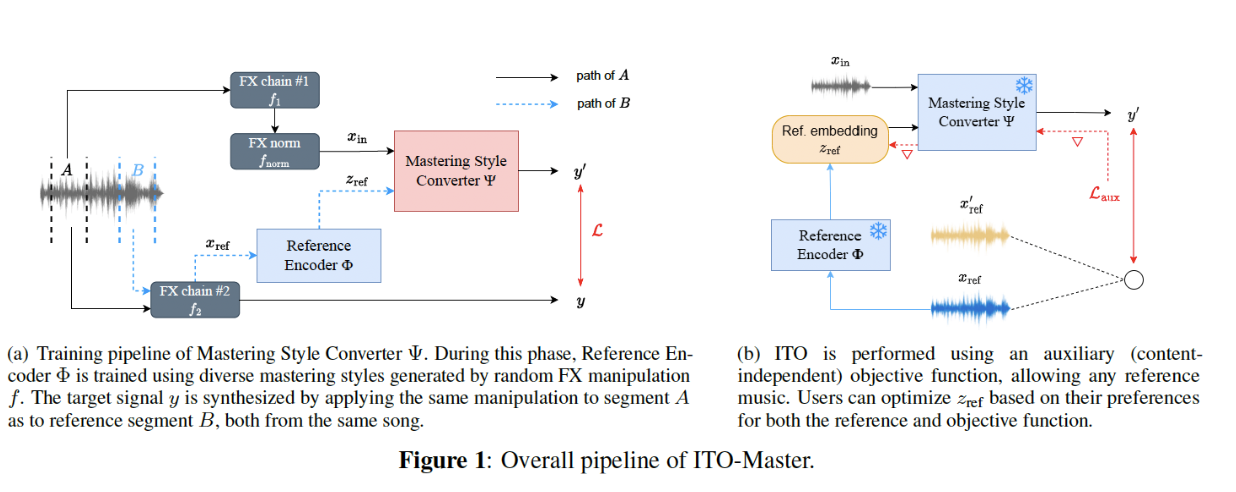

That philosophy underpins ITO-Master, a new research framework presented at ISMIR 2025. Designed to make mastering style transfer more flexible and artist-centered, ITO-Master uses Inference-Time Optimization (ITO) to let creators adjust and fine-tune results on the fly.

The Challenge

Automated mastering tools already exist, but most apply processing in a fixed way, leaving users little control. Reference-based systems (which transfer the mastering style of one track onto another) offered more promise, but still lacked flexibility.

Once a track was processed, if the sound didn’t quite fit the artist’s vision, there was no easy way to refine it. Adjustments meant starting over, retraining, or compromising. For creative professionals, this rigidity was a serious limitation.

Enter ITO-Master

ITO-Master introduces ITO into the mastering workflow. Rather than locking in the reference style at the start, the model keeps the door open for adjustments during inference. This means that even after the initial style transfer is applied, users can continue refining results by optimizing only the reference embedding (zref) at inference time, without retraining the full model.
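To make the idea concrete, here is a toy Python sketch of inference-time optimization in which gradient descent updates only the embedding while the model's weights stay frozen. Everything in it is a hypothetical stand-in rather than ITO-Master's code: the "model" is a fixed matrix `W` that maps `z_ref` to per-band gains, and the objective (matching a target log band-energy profile) substitutes for whatever loss a user, reference track, or text prompt would define.

```python
import numpy as np


def frozen_model(band_energy, z_ref, W):
    """Toy stand-in for a frozen mastering model: z_ref -> per-band gains via fixed W."""
    return band_energy * np.exp(W @ z_ref)


def profile_loss(band_energy, z_ref, W, target_log_energy):
    """Squared error between the output's log band energies and a target profile."""
    out = frozen_model(band_energy, z_ref, W)
    return float(np.sum((np.log(out) - target_log_energy) ** 2))


def ito_optimize(band_energy, z_ref, W, target_log_energy, lr=0.05, steps=500):
    """Inference-time optimization: gradient descent on z_ref only; W is never updated."""
    z = z_ref.copy()
    log_in = np.log(band_energy)
    for _ in range(steps):
        residual = log_in + W @ z - target_log_energy  # equals log(out) - target
        grad = 2.0 * W.T @ residual                     # gradient of profile_loss w.r.t. z
        z -= lr * grad
    return z


# Usage: start from the embedding of a reference track, then refine it
rng = np.random.default_rng(0)
W = 0.3 * rng.standard_normal((8, 4))        # frozen weights (8 bands, 4-dim embedding)
x_energy = rng.uniform(0.5, 2.0, size=8)     # band energies of the track being mastered
z0 = rng.standard_normal(4)                  # initial reference embedding
target = np.log(x_energy) + W @ rng.standard_normal(4)  # a reachable "preferred" profile
z_new = ito_optimize(x_energy, z0, W, target)
print(profile_loss(x_energy, z0, W, target), profile_loss(x_energy, z_new, W, target))
```

Because only the small embedding vector is updated, each refinement is cheap compared with retraining, which is the practical appeal of optimizing at inference time.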

In evaluations, ITO-Master was compared against both pre-AI and AI baselines. Pre-AI methods included “Matchering,” an open-source signal-processing tool for automatic mastering, and “Fx-Normalization,” another signal-processing approach. On the AI side, the team used an open-source end-to-end remastering model (E2E Remastering). No commercial systems were included in the comparison.

Here’s how it works:

Reference Embedding Optimization: At inference time, ITO-Master adjusts only the reference embedding (zref) while keeping the rest of the model fixed, giving creators precise control without retraining or starting over.

Black-box and White-box Approaches: The framework was tested in both paradigms: black-box neural networks that directly model audio, and white-box models using a structured differentiable mastering chain with interpretable parameters.

Micro-level Adjustments: Whether enhancing clarity, tightening dynamics, or altering stereo width, users can nudge the system toward their preferred result, even using text prompts (such as “make it sound more like hip-hop”).
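The appeal of the white-box variant is that its parameters mean something to an engineer. The hypothetical two-stage chain below (output gain in decibels, then mid/side stereo width) is far simpler than the mastering chain used in the research and only illustrates why such parameters stay interpretable. Both stages are smooth functions of their parameters, so in an autodiff framework they could be optimized in the same way as an embedding.

```python
import numpy as np


def white_box_chain(stereo, gain_db, width):
    """Hypothetical interpretable chain: output gain, then mid/side stereo width.

    stereo:  array of shape (2, n) holding left and right channels.
    gain_db: overall gain in decibels.
    width:   0.0 collapses to mono, 1.0 leaves the image unchanged, >1.0 widens it.
    """
    left, right = stereo
    mid = 0.5 * (left + right)
    side = 0.5 * (left - right) * width
    out = np.stack([mid + side, mid - side])
    return out * 10.0 ** (gain_db / 20.0)


# Usage: a slightly louder, wider master of a random stereo signal
rng = np.random.default_rng(0)
x = rng.standard_normal((2, 1000))
wider_and_louder = white_box_chain(x, gain_db=3.0, width=1.5)
```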

Why It Matters

This flexibility is key. Mastering isn’t one-size-fits-all. A track’s final character often comes down to subtle choices. By enabling fine-grained refinements, ITO-Master bridges the gap between automation and artistry.

Listening tests reinforced this: in a MUSHRA-style evaluation with experienced music producers, ITO-Master consistently outperformed all baseline systems, with statistically significant improvements (p < 0.05). Beyond numbers, the framework also demonstrated creative versatility. In one experiment, users steered mastering with simple text prompts—“Classic Music,” “Metal Music,” or “Hip-Hop Music”—and the system adjusted tonal balance and dynamics in line with genre expectations, from brighter classical recordings to bass-heavy hip-hop mixes.

Together, these results showed:

- Improved style similarity: Objective metrics and listening tests confirmed that ITO-Master outputs matched the feel of reference tracks more closely than both traditional feature-matching systems (Fx-Normalization, Matchering) and an AI-based end-to-end remastering model.

- Greater adaptability: Compared to these baselines, ITO-Master offered consistent improvements when users optimized at inference time, especially with the white-box model’s interpretable mastering chain.

- Creative steering: With text-conditioned optimization, the model responded to prompts like “Classic Music”, “Metal Music”, or “Hip-Hop Music”, shifting tonal balance and dynamics in genre-consistent ways.

Breaking New Ground

The research highlights two important advances:

1. Inference-time adaptability: a practical way to refine mastering results without rebuilding or retraining the system.

2. Genre-sensitive responsiveness: qualitative tests showed the model could meaningfully adjust to text prompts, opening the door to intuitive human–AI collaboration.

Toward the Future

ITO-Master represents an exploration into how and where AI might provide an intelligent, controllable mastering capability. By combining the efficiency of automation with the nuance of human input, we can begin to test how tools might serve both professionals seeking precision and creators exploring new sonic directions.

The work was led by Junghyun Koo, Marco A. Martínez-Ramírez, Wei-Hsiang Liao (Sony AI), Giorgio Fabbro, Michele Mancusi (Sony Europe), and Yuki Mitsufuji (Sony AI / Sony Group).

Code and demos are available on GitHub:

https://github.com/SonyResearch/ITO-Master

Unlocking Instrument-Wise Effects Understanding

Fx-Encoder++: Extracting Instrument-Wise Audio Effects Representations From Mixtures

Music is more than melody and rhythm. It’s also shaped by the subtle art of audio effects. Reverb, compression, distortion, delay: these are the tools that give recordings depth, presence, and emotion. Yet while AI research has made progress in understanding musical content, it has struggled to capture how effects shape the sound of individual instruments within a mix.

That’s the challenge addressed by Fx-Encoder++, a new line of research built on the publicly available, Creative Commons-licensed MoisesDB dataset. Developed by researchers at Sony AI and National Taiwan University, the work was presented this year at ISMIR 2025 in Daejeon, South Korea.

The Challenge

Imagine a producer balancing a track. The bass might need compression, the vocals reverb, and the drums a touch of delay. Traditional AI models could detect “there are effects here,” but they lacked the ability to pinpoint which instrument was affected and how.

Most prior methods treated the mix as a whole, blurring the individual impact of effects. This gap limited AI’s usefulness for tasks like automatic mixing or style transfer, where precision matters.

Attempts to solve this often relied on source separation—splitting instruments first, then analyzing effects. But this approach introduced artifacts, such as lost high frequencies or smearing, distorting the very qualities that needed to be measured.

Enter Fx-Encoder++

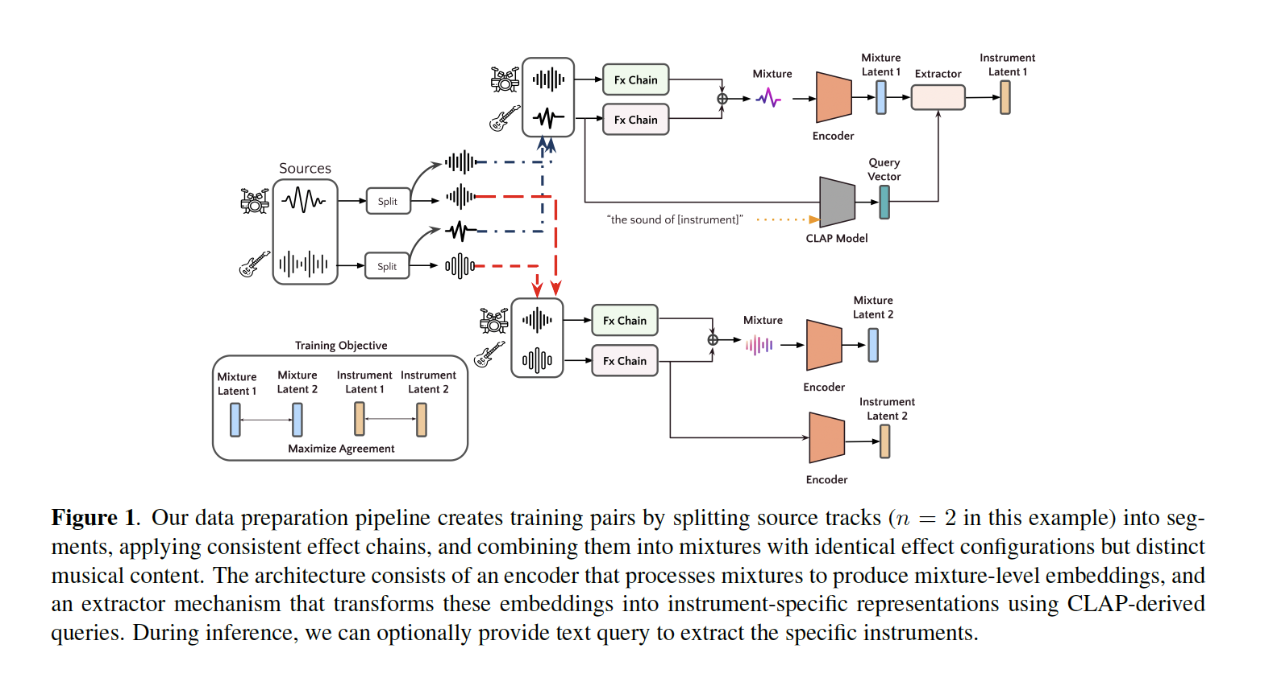

Fx-Encoder++ takes a different path. Rather than relying on separation, the model learns to extract instrument-wise audio effects representations directly from a full mixture.

It does this with a contrastive learning framework inspired by SimCLR, a simple framework for contrastive learning of visual representations introduced at ICML 2020. Fx-Encoder++ extends that foundation with several key design choices:

- Fx-Normalization – leveling out inherent effects in recordings so the model can focus on applied transformations.

- Consistent instrument composition – ensuring training samples use the same instrument types to avoid shortcuts.

- An “extractor” mechanism – the breakthrough feature, which uses instrument queries (via audio or text) to transform mixture-level embeddings into instrument-specific representations.
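For readers new to SimCLR-style training, the sketch below shows the NT-Xent contrastive loss that SimCLR popularized, written in NumPy. Treating two clips rendered with the same effects as a positive pair is an illustrative assumption; the paper's exact pairing scheme, temperature, and loss details may differ.

```python
import numpy as np


def nt_xent(z_a, z_b, temperature=0.1):
    """NT-Xent loss (as popularized by SimCLR) for N positive pairs.

    z_a[i] and z_b[i] form a positive pair; all other items in the 2N batch are negatives.
    """
    z = np.concatenate([z_a, z_b], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # unit vectors -> cosine similarity
    sim = z @ z.T / temperature
    np.fill_diagonal(sim, -np.inf)                    # an item is never its own negative
    n = z_a.shape[0]
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(n)])  # partner index per row
    row_max = sim.max(axis=1, keepdims=True)          # stabilize the log-sum-exp
    log_denom = row_max[:, 0] + np.log(np.exp(sim - row_max).sum(axis=1))
    log_prob = sim[np.arange(2 * n), pos] - log_denom
    return float(-log_prob.mean())


# Usage: matched views (small perturbations) should score lower than unrelated ones
rng = np.random.default_rng(0)
anchors = rng.standard_normal((8, 16))
matched = anchors + 0.05 * rng.standard_normal((8, 16))
unrelated = rng.standard_normal((8, 16))
print(nt_xent(anchors, matched), nt_xent(anchors, unrelated))
```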

With this design, Fx-Encoder++ bridges the gap between mixture-level awareness and instrument-level precision, making it possible to ask: What effects are shaping the bass here? The vocals? The guitar?
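The article does not spell out how the extractor is built, so the following is purely a hypothetical sketch of one common pattern for query conditioning: an instrument query embedding attends over frame-level mixture embeddings and pools them into a single instrument-specific vector. The function name, shapes, and random projections are all assumptions for illustration.

```python
import numpy as np


def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()


def extract_instrument_embedding(mixture_frames, query, W_k, W_v):
    """Hypothetical query-conditioned extractor using single-head attention pooling.

    mixture_frames: (T, d) frame-level embeddings of the full mix.
    query:          (d,) embedding of an instrument query (audio- or text-derived).
    W_k, W_v:       (d, d) key/value projections (learned in a real system).
    Returns a (d,) instrument-specific embedding and the (T,) attention weights.
    """
    keys = mixture_frames @ W_k
    values = mixture_frames @ W_v
    scores = keys @ query / np.sqrt(query.shape[0])  # scaled dot-product relevance
    weights = softmax(scores)
    return weights @ values, weights


# Usage: two different queries pool the same mixture differently
rng = np.random.default_rng(0)
d, T = 16, 50
frames = rng.standard_normal((T, d))
W_k, W_v = rng.standard_normal((d, d)), rng.standard_normal((d, d))
bass_query, vocal_query = rng.standard_normal(d), rng.standard_normal(d)
bass_emb, bass_weights = extract_instrument_embedding(frames, bass_query, W_k, W_v)
vocal_emb, _ = extract_instrument_embedding(frames, vocal_query, W_k, W_v)
```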

Why It Matters

By enabling instrument-specific effects understanding, Fx-Encoder++ could pave the way for new music production tools. Automatic mixing, audio effects style transfer, and creative production workflows could all benefit.

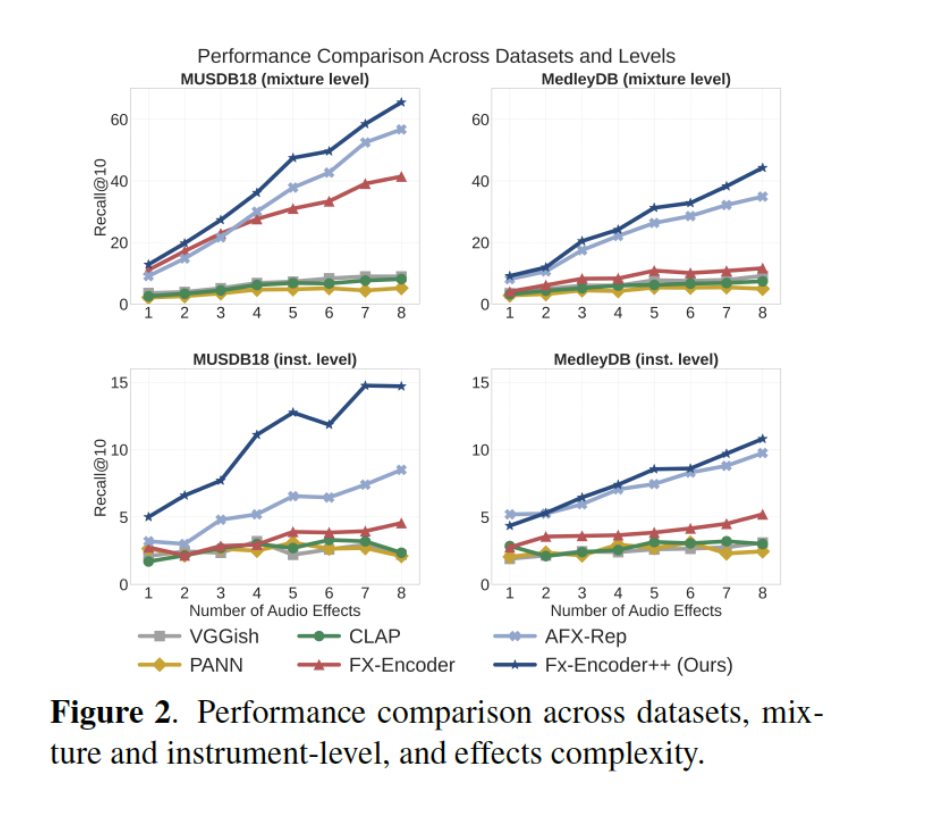

Tests show the model outperforms existing approaches such as Fx-Encoder and AFx-Rep on benchmark datasets like MUSDB18 and MedleyDB, particularly in identifying effects applied to bass and drums. Importantly, Fx-Encoder++ also showed that it could extract effects embeddings even from text-based queries (e.g., “this is the sound of trumpet”), opening the door to more intuitive, human-centered interfaces.

Breaking New Ground

The results highlight two key achievements:

- Superior retrieval accuracy – Fx-Encoder++ consistently ranked higher in recall compared to general-purpose models (CLAP, PANN, VGGish) and effect-specific baselines.

- Instrument-wise extraction – even without external source separation, the model could directly identify instrument-specific effects, a first for this field.
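Retrieval comparisons like this are commonly reported as recall@k: the share of queries whose correct match appears among the k most similar candidates. A minimal NumPy sketch, assuming cosine similarity and exactly one correct match per query (the paper's evaluation protocol may differ):

```python
import numpy as np


def recall_at_k(queries, gallery, k=1):
    """Fraction of queries whose correct match (same row index in gallery) ranks in the top k.

    queries, gallery: (N, d) embeddings; row i of each shares the same effects setting.
    """
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    sim = q @ g.T                                  # cosine similarity, shape (N, N)
    top_k = np.argsort(-sim, axis=1)[:, :k]        # indices of the k nearest gallery items
    hits = (top_k == np.arange(len(queries))[:, None]).any(axis=1)
    return float(hits.mean())


# Usage: noisy views of the same items as queries
rng = np.random.default_rng(0)
gallery = rng.standard_normal((100, 32))
queries = gallery + 0.3 * rng.standard_normal((100, 32))
print(recall_at_k(queries, gallery, k=1), recall_at_k(queries, gallery, k=5))
```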

While challenges remain (such as improving performance on vocals and refining single-effect detection), the work marks a significant step toward AI systems that understand not just what instruments are playing, but how they are shaped by production choices.

The Future of Intelligent Music Production

Fx-Encoder++ underscores a broader shift in AI for music: from recognizing content to modeling the creative decisions behind it. By capturing instrument-level effects directly from complex mixtures, this research opens doors to tools that can support musicians, producers, and engineers.

The project was led by Yen-Tung Yeh (National Taiwan University), Junghyun Koo, Marco A. Martínez-Ramírez, Wei-Hsiang Liao, Yi-Hsuan Yang (National Taiwan University), and Yuki Mitsufuji (Sony AI), with code released to the research community:

https://github.com/SonyResearch/Fx-Encoder_PlusPlus

Making Music Identification More Robust

Enhancing Neural Audio Fingerprint Robustness to Audio Degradation for Music Identification

Music identification systems like Shazam work by creating “audio fingerprints.” These are compact digital signatures that let a track be recognized even when it’s played in noisy or degraded conditions. But today’s neural audio fingerprinting (AFP) models often fall short in real-world scenarios, struggling with background noise, reverberation, or microphone quality.

The Challenge

Most AFP research trains models with synthetic degradations, which don’t fully capture the messy conditions of live music venues, clubs, or streaming platforms. In addition, neural AFP has leaned heavily on a single loss function (NT-Xent) without fully exploring alternatives. This combination has limited robustness and scalability.

Enter Neural Music Fingerprinting (NMFP)

Sony AI and collaborators developed a new approach that focuses on realistic audio degradations and improved training strategies. Instead of simulating noise narrowly, NMFP draws from diverse acoustic datasets, including recordings of actual rooms, microphones, and public spaces. It also corrects flaws in prior evaluation methods and introduces best practices like:

- Avoiding false negatives during training.

- Preserving low frequencies critical for real-world recognition.

- Incorporating full impulse responses to better simulate room acoustics.
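A simplified version of such a degradation step might look like the sketch below: convolve with a full room impulse response, then add noise scaled to a chosen signal-to-noise ratio. The function name, the SNR parameterization, and the choice to apply no high-pass filter (so low frequencies survive) are illustrative assumptions, not NMFP's actual pipeline.

```python
import numpy as np


def degrade(clean, impulse_response, noise, snr_db):
    """Hypothetical degradation: full-length reverb, then additive noise at a target SNR.

    noise must be at least as long as clean. No filtering is applied, so the
    low-frequency content of the signal is left intact.
    """
    # Convolve with the whole impulse response (no truncation), keep the original length
    reverberant = np.convolve(clean, impulse_response)[: len(clean)]
    noise = noise[: len(reverberant)]
    signal_power = np.mean(reverberant ** 2)
    noise_power = np.mean(noise ** 2)
    # Scale noise so that 10 * log10(signal_power / scaled_noise_power) == snr_db
    scale = np.sqrt(signal_power / (noise_power * 10.0 ** (snr_db / 10.0)))
    return reverberant + scale * noise


# Usage with a synthetic, exponentially decaying impulse response
rng = np.random.default_rng(0)
clean = rng.standard_normal(8000)
ir = np.exp(-np.arange(800) / 100.0) * rng.standard_normal(800)
noise = rng.standard_normal(8000)
degraded = degrade(clean, ir, noise, snr_db=10.0)
```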

On the optimization side, the team systematically compared multiple loss functions. Contrary to common belief, the classic triplet loss outperformed newer methods like NT-Xent, proving more effective at distinguishing tracks under noisy conditions.
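For reference, a standard margin-based triplet loss is sketched below. The margin value and the use of squared Euclidean distance between normalized embeddings are assumptions; the careful tuning the authors describe (such as how triplets are selected) is not reproduced here.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Margin-based triplet loss on L2-normalized embeddings, averaged over the batch.

    anchor/positive: fingerprints of the same audio (e.g. clean vs degraded).
    negative:        fingerprints of different audio.
    """
    def normalize(x):
        return x / np.linalg.norm(x, axis=1, keepdims=True)

    a, p, n = normalize(anchor), normalize(positive), normalize(negative)
    d_pos = np.sum((a - p) ** 2, axis=1)   # squared distance to the positive
    d_neg = np.sum((a - n) ** 2, axis=1)   # squared distance to the negative
    # Penalize only triplets where the negative is not at least `margin` farther away
    return float(np.mean(np.maximum(0.0, d_pos - d_neg + margin)))


rng = np.random.default_rng(0)
a = rng.standard_normal((4, 8))
print(triplet_loss(a, a, -a))  # 0.0: positives identical, negatives opposite
```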

Why It Matters

The results were striking: NMFP outperformed existing baselines by more than 20% in real-world tests across bars, nightclubs, and concert halls. This means more reliable recognition of music even when played in unpredictable environments.

Breaking New Ground

- Realistic degradations: Training with diverse room and microphone recordings instead of narrow synthetic noise.

- Improved loss functions: Showing that triplet loss, with careful tuning, is better suited for AFP than commonly used alternatives.

- State-of-the-art results: Delivering significant gains on both synthetic benchmarks and live-recorded datasets.

Toward the Future of Music Identification

NMFP provides a stronger foundation for music identification, broadcast monitoring, and rights management. By building models that recognize songs under real-life conditions, this research has the potential to support efforts to ensure creators are credited — and compensated — no matter where their music is played.

The project was led by R. Oguz Araz (Universitat Pompeu Fabra), Guillem Cortès-Sebastià (BMAT), Emilio Molina (BMAT), Joan Serrà (Sony AI), Xavier Serra (Universitat Pompeu Fabra), Yuki Mitsufuji (Sony AI / Sony Group), and Dmitry Bogdanov (Universitat Pompeu Fabra).

Code and pre-trained models are openly available here: https://github.com/raraz15/neural-music-fp

Conclusion

Taken together, these four research pathways point to a broader direction for AI in music and audio creation: tools that respect the nuances of creativity while expanding what’s possible in production, mastering, and identification. By learning how to make systems more intuitive, adaptable, and reliable in real-world conditions, Sony AI’s research at ISMIR 2025 underscores a commitment to empowering those who make and care for music.

As the field continues to evolve, these contributions provide both practical methods and open resources that the wider community can build upon.
