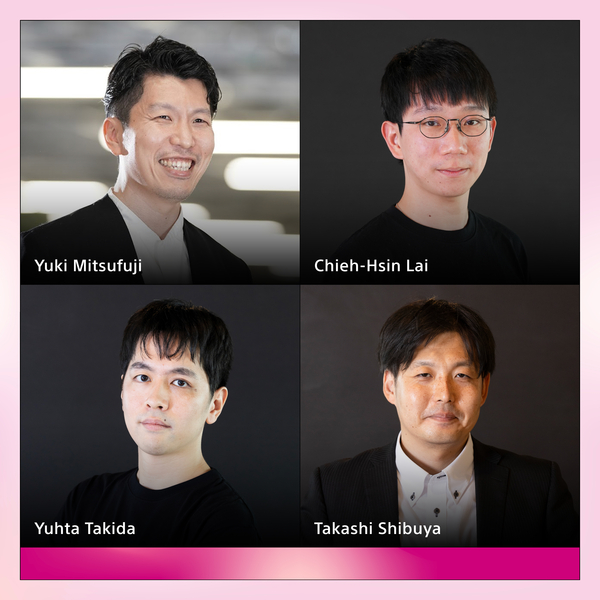

Chieh-Hsin Lai

Profile

Since 2021, Jesse has worked as a research scientist at Sony AI, focusing on robustness, deep generative models, and theoretical deep learning. Prior to Sony AI, he worked as a research assistant at the Institute of Mathematics, Academia Sinica. Jesse earned his PhD in Mathematics from the University of Minnesota, Twin Cities.

Message

“I specialize in developing theoretically grounded deep generative models that excel in producing high-fidelity samples, rapid sampling, ease of training, and controllable generation. I expect to unlock the black-box nature of deep generative modeling through the application of advanced mathematical tools. With a focus on innovation and precision, I am dedicated to pushing the boundaries of artificial intelligence and contributing to the advancement of cutting-edge technology.”

Publications

Consistency Training (CT) has recently emerged as a promising alternative to diffusion models, achieving competitive performance in image generation tasks. However, non-distillation consistency training often suffers from high variance and instability, and analyzing and impr…

We demonstrate the efficacy of using intermediate representations from a single foundation model to enhance various music downstream tasks. We introduce SoniDo, a music foundation model (MFM) designed to extract hierarchical features from target music samples. By leveraging …

By embedding discrete representations into a continuous latent space, we can leverage continuous-space latent diffusion models to handle generative modeling of discrete data. However, despite their initial success, most latent diffusion methods rely on fixed pretrained embed…

Deep Generative Models (DGMs), including Energy-Based Models (EBMs) and Score-based Generative Models (SGMs), have advanced high-fidelity data generation and complex continuous distribution approximation. However, their application in Markov Decision Processes (MDPs), partic…

Music timbre transfer is a challenging task that involves modifying the timbral characteristics of an audio signal while preserving its melodic structure. In this paper, we propose a novel method based on dual diffusion bridges, trained using the CocoChorales Dataset, which …

Existing work on pitch and timbre disentanglement has been mostly focused on single-instrument music audio, excluding the cases where multiple instruments are presented. To fill the gap, we propose DisMix, a generative framework in which the pitch and timbre representations …

Sound content is an indispensable element for multimedia works such as video games, music, and films. Recent high-quality diffusion-based sound generation models can serve as valuable tools for the creators. However, despite producing high-quality sounds, these models often …

In typical multimodal contrastive learning, such as CLIP, encoders produce one point in the latent representation space for each input. However, one-point representation has difficulty in capturing the relationship and the similarity structure of a huge amount of instances in…

Controllable generation through Stable Diffusion (SD) fine-tuning aims to improve fidelity, safety, and alignment with human guidance. Existing reinforcement learning from human feedback methods usually rely on predefined heuristic reward functions or pretrained reward model…

Diffusion models have seen notable success in continuous domains, leading to the development of discrete diffusion models (DDMs) for discrete variables. Despite recent advances, DDMs face the challenge of slow sampling speeds. While parallel sampling methods like τ-leaping ac…

Sound content creation, essential for multimedia works such as video games and films, often involves extensive trial-and-error, enabling creators to semantically reflect their artistic ideas and inspirations, which evolve throughout the creation process, into the sound. Rece…

To accelerate sampling, diffusion models (DMs) are often distilled into generators that directly map noise to data in a single step. In this approach, the resolution of the generator is fundamentally limited by that of the teacher DM. To overcome this limitation, we propose …

Generating novel views from a single image remains a challenging task due to the complexity of 3D scenes and the limited diversity in the existing multi-view datasets to train a model on. Recent research combining large-scale text-to-image (T2I) models with monocular depth e…

Contrastive cross-modal models such as CLIP and CLAP aid various vision-language (VL) and audio-language (AL) tasks. However, there has been limited investigation of and improvement in their language encoder, which is the central component of encoding natural language descri…

Vector quantization (VQ) is a technique to deterministically learn features with discrete codebook representations. It is commonly performed with a variational autoencoding model, VQ-VAE, which can be further extended to hierarchical structures for making high-fidelity recon…

Restoring degraded music signals is essential to enhance audio quality for downstream music manipulation. Recent diffusion-based music restoration methods have demonstrated impressive performance, and among them, diffusion posterior sampling (DPS) stands out given its intrin…

Generative adversarial networks (GANs) learn a target probability distribution by optimizing a generator and a discriminator with minimax objectives. This paper addresses the question of whether such optimization actually provides the generator with gradients that make its d…

Despite the recent advancements, conditional image generation still faces challenges of cost, generalizability, and the need for task-specific training. In this paper, we propose Manifold Preserving Guided Diffusion (MPGD), a training-free conditional generation framework th…

Consistency Models (CM) (Song et al., 2023) accelerate score-based diffusion model sampling at the cost of sample quality but lack a natural way to trade-off quality for speed. To address this limitation, we propose Consistency Trajectory Model (CTM), a generalization encomp…

Pre-trained diffusion models have been successfully used as priors in a variety of linear inverse problems, where the goal is to reconstruct a signal from noisy linear measurements. However, existing approaches require knowledge of the linear operator. In this paper, we prop…

Score-based generative models learn a family of noise-conditional score functions corresponding to the data density perturbed with increasingly large amounts of noise. These perturbed data densities are tied together by the Fokker-Planck equation (FPE), a partial differentia…

Removing reverb from reverberant music is a necessary technique to clean up audio for downstream music manipulations. Reverberation of music contains two categories, natural reverb, and artificial reverb. Artificial reverb has a wider diversity than natural reverb due to its…

One noted issue of vector-quantized variational autoencoder (VQ-VAE) is that the learned discrete representation uses only a fraction of the full capacity of the codebook, also known as codebook collapse. We hypothesize that the training scheme of VQ-VAE, which involves some…

Blog

May 10, 2024 | Events | Sony AI

Revolutionizing Creativity with CTM and SAN: Sony AI's Groundbreaking Advances in Generative AI for Creators

In the dynamic world of generative AI, the quest for more efficient, versatile, and high-quality models continues to push forward without any reduction in intensity. At the forefront of this technological evolution are Sony AI's r…

JOIN US

Shape the Future of AI with Sony AI

We want to hear from those of you who have a strong desire to shape the future of AI.