Naoki

Murata

Publications

By embedding discrete representations into a continuous latent space, we can leverage continuous-space latent diffusion models to handle generative modeling of discrete data. However, despite their initial success, most latent diffusion methods rely on fixed pretrained embed…

In text-to-motion generation, controllability as well as generation quality and speed has become increasingly critical. The controllability challenges include generating a motion of a length that matches the given textual description and editing the generated motions accordi…

Prompt engineering is effective for controlling the output of text-to-image (T2I) generative models, but it is also laborious due to the need for manually crafted prompts. This challenge has spurred the development of algorithms for automated prompt generation. However, thes…

In typical multimodal contrastive learning, such as CLIP, encoders produce onepoint in the latent representation space for each input. However, one-point representation has difficulty in capturing the relationship and the similarity structure of a huge amount of instances in…

Controllable generation through Stable Diffusion (SD) fine-tuning aims to improve fidelity, safety, and alignment with human guidance. Existing reinforcement learning from human feedback methods usually rely on predefined heuristic reward functions or pretrained reward model…

To accelerate sampling, diffusion models (DMs) are often distilled into generators that directly map noise to data in a single step. In this approach, the resolution of the generator is fundamentally limited by that of the teacher DM. To overcome this limitation, we propose …

Generating novel views from a single image remains a challenging task due to the complexity of 3D scenes and the limited diversity in the existing multi-view datasets to train a model on. Recent research combining large-scale text-to-image (T2I) models with monocular depth e…

Contrastive cross-modal models such as CLIP and CLAP aid various vision-language (VL) and audio-language (AL) tasks. However, there has been limited investigation of and improvement in their language encoder, which is the central component of encoding natural language descri…

Vector quantization (VQ) is a technique to deterministically learn features with discrete codebook representations. It is commonly performed with a variational autoencoding model, VQ-VAE, which can be further extended to hierarchical structures for making high-fidelity recon…

Restoring degraded music signals is essential to enhance audio quality for downstream music manipulation. Recent diffusion-based music restoration methods have demonstrated impressive performance, and among them, diffusion posterior sampling (DPS) stands out given its intrin…

Generative adversarial networks (GANs) learn a target probability distribution by optimizing a generator and a discriminator with minimax objectives. This paper addresses the question of whether such optimization actually provides the generator with gradients that make its d…

Despite the recent advancements, conditional image generation still faces challenges of cost, generalizability, and the need for task-specific training. In this paper, we propose Manifold Preserving Guided Diffusion (MPGD), a training-free conditional generation framework th…

Consistency Models (CM) (Song et al., 2023) accelerate score-based diffusion model sampling at the cost of sample quality but lack a natural way to trade-off quality for speed. To address this limitation, we propose Consistency Trajectory Model (CTM), a generalization encomp…

Restoring degraded music signals is essential to enhance audio quality for downstream music manipulation. Recent diffusion-based music restoration methods have demonstrated impressive performance, and among them, diffusion posterior sampling (DPS) stands out given its intrin…

Although deep neural network (DNN)-based speech enhancement (SE) methods outperform the previous non-DNN-based ones, they often degrade the perceptual quality of generated outputs. To tackle this problem, we introduce a DNN-based generative refiner, Diffiner, aiming to impro…

Pre-trained diffusion models have been successfully used as priors in a variety of linear inverse problems, where the goal is to reconstruct a signal from noisy linear measurements. However, existing approaches require knowledge of the linear operator. In this paper, we prop…

Score-based generative models learn a family of noise-conditional score functions corresponding to the data density perturbed with increasingly large amounts of noise. These perturbed data densities are tied together by the Fokker-Planck equation (FPE), a partial differentia…

In this paper we propose a novel generative approach, DiffRoll, to tackle automatic music transcription (AMT).Instead of treating AMT as a discriminative task in which the model is trained to convert spectrograms into piano rolls, we think of it as a conditional generative t…

Removing reverb from reverberant music is a necessary technique to clean up audio for downstream music manipulations. Reverberation of music contains two categories, natural reverb, and artificial reverb. Artificial reverb has a wider diversity than natural reverb due to its…

One noted issue of vector-quantized variational autoencoder (VQ-VAE) is that the learned discrete representation uses only a fraction of the full capacity of the codebook, also known as codebook collapse. We hypothesize that the training scheme of VQ-VAE, which involves some…

Blog

May 10, 2024 | Events | Sony AI

Revolutionizing Creativity with CTM and SAN: Sony AI's Groundbreaking Advances in Generative AI for Creators

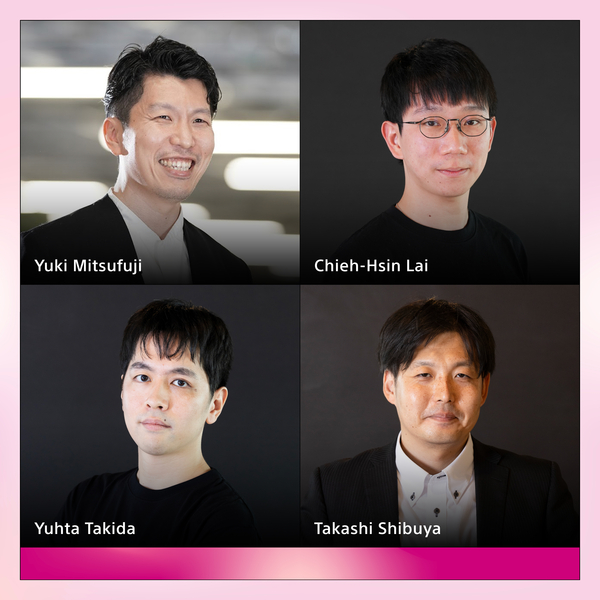

In the dynamic world of generative AI, the quest for more efficient, versatile, and high-quality models continues to push forward without any reduction in intensity. At the forefront of this technological evolution are Sony AI's r…

In the dynamic world of generative AI, the quest for more efficient, versatile, and high-quality models continues to push forward …

JOIN US

Shape the Future of AI with Sony AI

We want to hear from those of you who have a strong desire

to shape the future of AI.