Leading the way towards more ethical AI that protects the interests of AI users and creators by pushing the industry to ensure AI technologies are fair, transparent, and accountable.

Featured Initiative — FHIBE: A Fair Reflection

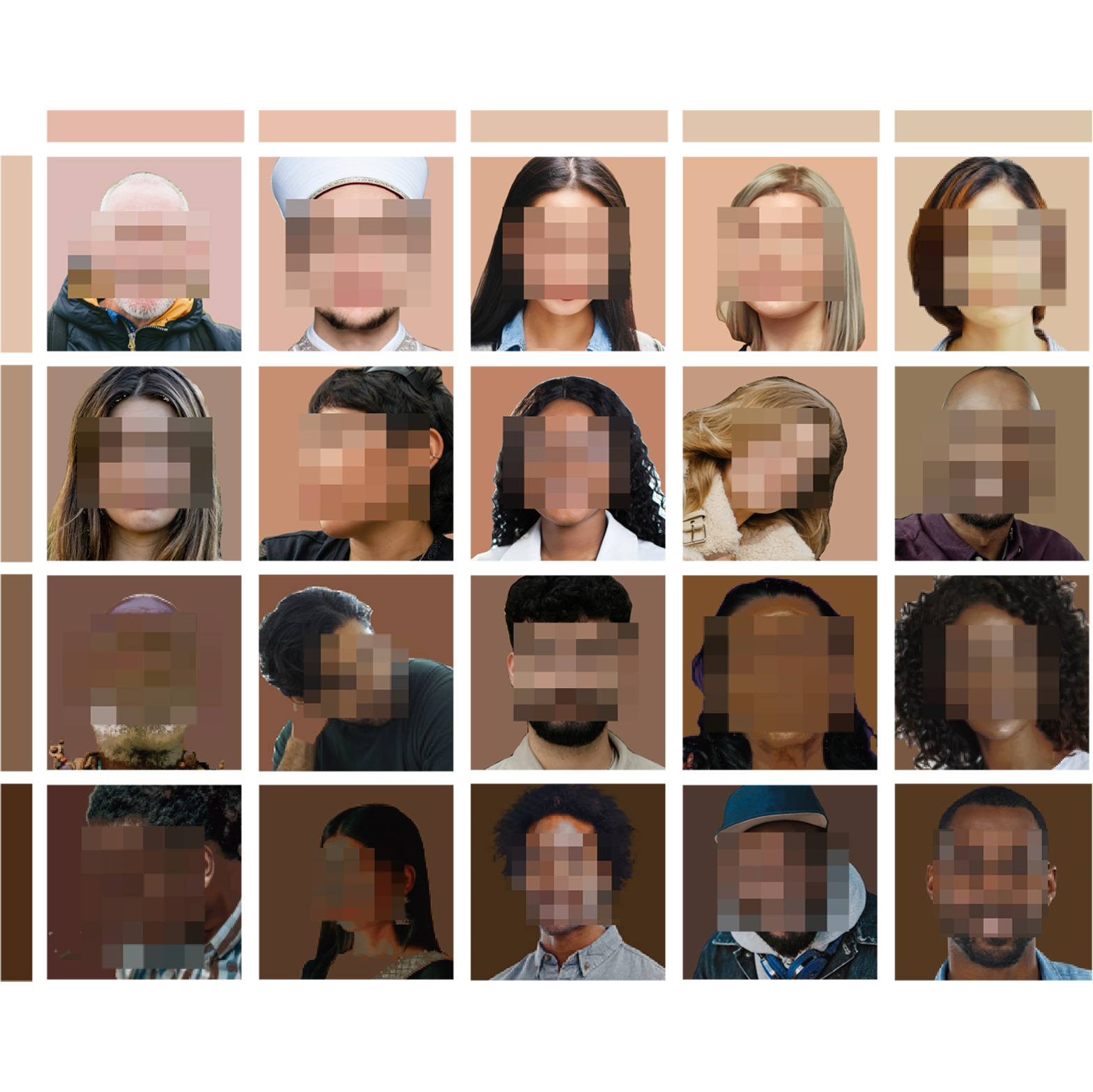

Sony AI’s Fair Human-Centric Image Benchmark (FHIBE) is our latest effort to address dataset bias at scale. It is the first publicly available, globally diverse, consensually collected fairness evaluation dataset for a wide variety of human-centric computer vision tasks. Learn how Sony AI is promoting ethical data collection and driving fairness in AI.

Watch the Film: A Fair Reflection

Explore how image datasets reflect deeper societal patterns—and how FHIBE offers a new approach.

Read the Nature Paper

Our peer-reviewed publication details the framework behind FHIBE and its potential to support fairness audits, transparency, and responsible AI evaluation.

Download the Benchmark Tool

FHIBE is more than a dataset—it’s a community resource. Download the benchmark tool and explore how it can help you assess and mitigate bias in image-based models.

Discover More on Our Blog

Dive into our FHIBE series, where we unpack the research, offer use cases, examine web scraping and why it's unethical, and more.

Research Highlights from Our AI Ethics Flagship

At Sony AI, we approach AI ethics not as an afterthought, but as a foundation. Below are key highlights from our research efforts—each one offering a deeper look at how our team is reshaping responsible AI from the inside out.

A Taxonomy for Fair Dataset Curation

Exploring the Challenges of Fair Dataset Curation

This research introduces a practical, three-part framework—composition, process, and release—for understanding fairness in real-world datasets. It captures the lived experiences of data curators and maps out the systemic and institutional barriers that get in the way of building equitable datasets.

A Simpler Tool to Fight Bias

Mitigating Bias in AI Models: A New Approach with TAB

TAB (Targeted Augmentations for Bias Mitigation) addresses bias not by overhauling model architecture or using protected attributes, but by monitoring training history to identify spurious patterns. The result is a bias mitigation strategy that’s easy to implement and applicable across tasks.

Measuring Diversity with Precision

Ushering in Needed Change in the Pursuit of More Diverse Datasets

Winner of Best Paper at ICML 2024, this work proposes a new standard for how we define, measure, and validate dataset diversity—using principles from measurement theory. It moves the field beyond vague claims and toward scientific rigor.

Moving Beyond Light vs. Dark

Beyond Skin Tone: A Multidimensional Measure of Apparent Skin Color

By introducing hue (red vs. yellow) as an additional axis alongside tone (light vs. dark), this paper reveals previously undetected biases in computer vision. It proposes a new way to annotate and assess datasets and generative models for fairness.

From Research to Action

Sony AI Ethics Flagship: Reflecting on Our Progress and Purpose

In this comprehensive roundup, we trace our journey since 2020—from building governance frameworks to launching benchmark tools and pushing the boundaries of fairness in computer vision. Featuring commentary from Alice Xiang, the post showcases how ethical leadership is embedded into every part of our work.

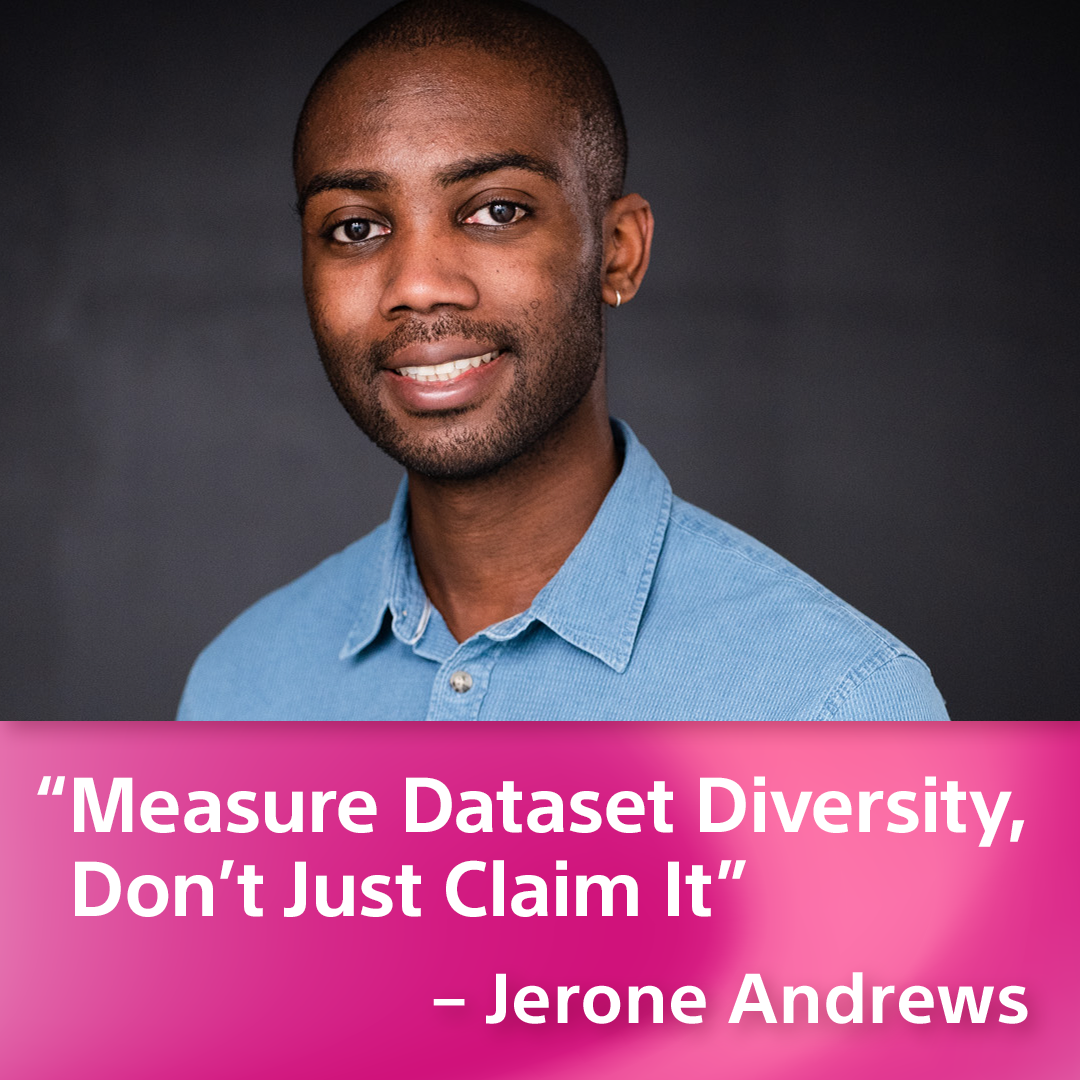

Meet Some of Our Team

Latest Publications

Machine learning (ML) datasets, often perceived as neutral, inherently encapsulate abstract and disputed social constructs. Dataset curators frequently employ value-laden terms such as diversity, bias, and quality to characterize datasets. Despite their prevalence, these ter…

The rapid and wide-scale adoption of AI to generate human speech poses a range of significant ethical and safety risks to society that need to be addressed. For example, a growing number of speech generation incidents are associated with swatting attacks in the United States…

In this paper, we propose an approach to obtain a personalized generative prior with explicit control over a set of attributes. We build upon MyStyle, a recently introduced method, that tunes the weights of a pre-trained StyleGAN face generator on a few images of an individu…

Latest Blog

Exploring the Challenges of Fair Dataset Curation: Insights from NeurIPS 2024

Sony AI’s paper accepted at NeurIPS 2024, "A Taxonomy of Challenges to Curating Fair Datasets," highlights the pivotal steps toward achieving fairness in machine learning and is a …

Mitigating Bias in AI Models: A New Approach with TAB

Artificial intelligence models, especially deep neural networks (DNNs), have proven to be powerful tools in tasks like image recognition and natural language processing. However, t…

Not My Voice! A Framework for Identifying the Ethical and Safety Harms of Speech…

In recent years, the rise of AI-driven speech generation has led to both remarkable advancements and significant ethical concerns. Speech generation can be a driver for accessibili…

JOIN US

Shape the Future of AI with Sony AI

We want to hear from those of you who have a strong desire to shape the future of AI.