Sony AI 2025, Year in Review


Sony AI

December 22, 2025

As the year comes to a close, we’re reflecting not only on key research milestones but also on the scale of work achieved across our global teams. In 2025, we published 87 papers, contributed to 15 major conferences, and expanded collaborations across audio, imaging, reinforcement learning, ethics, analog design, and scientific discovery.

We are proud to have advanced our mission across our Flagship Projects this past year. The year’s work reaffirmed a consistent idea: progress is strongest when technical rigor, responsible design, ethics, and creator-focused purpose move together.

Across our global teams, we saw meaningful steps forward, from building one of the field’s most comprehensive fairness benchmarks to advancing research in sound, imaging, sensing, analog design, and adaptive agents. We also strengthened industry partnerships, including our collaboration with Audiokinetic announced in August, and expanded contributions across creator tools and sensing systems. Taken together, these efforts reflect a commitment to developing AI that is useful in the settings where people create, explore, and make decisions.

Building Responsible AI From the Foundations Up

When fairness begins at the dataset level

Early in the year, our ethics research returned to a foundational question: what changes when fairness starts with the data itself? This work explored long-standing issues in human-centric computer vision, including non-consensual scraping, representational gaps, and the paradox of being “unseen” yet still misrepresented by AI systems. It also introduced techniques such as Targeted Augmentations for Bias Mitigation (TAB) and formal methods for conceptualizing and measuring dataset diversity.

This research set the stage for one of the year’s defining contributions: the publication of FHIBE, the Fair Human-Centric Image Benchmark, which was featured on the cover of Nature.

The publication drew global media attention, with coverage in Engadget, The Register, Fierce Electronics, AIHub, and other outlets.

FHIBE: A new benchmark for consent-driven, globally representative evaluation

FHIBE was designed to help developers uncover bias before deployment. Built from more than 10,000 images collected with informed consent, fair pay, and privacy safeguards, FHIBE includes 1,234 intersectional identity groups and extensive annotations for tasks such as face detection, keypoint estimation, and vision–language evaluation. Its emphasis on participant autonomy (including revocable consent) marks a shift in how evaluation datasets can be built.

A companion story traced the three-year journey behind FHIBE, revealing the technical, legal, cultural, and operational decisions that shaped it. These included global vendor management, privacy workflows, annotation consistency, and the balance between representation and real-world utility.

FHIBE drew significant attention, including the cover of Nature and an accompanying Nature editorial. That recognition signals how critical responsible data collection has become and serves as a call to action for fundamental change. Even more exciting, we’re proud to share that Alice Xiang, Global Head of AI Governance at Sony Group Corporation and Lead Research Scientist at Sony AI, was named among Nature’s “Ones to Watch 2026” in its feature “Nature’s 10: Ten people who shaped science in 2025.”

Strengthening Tools for Creators Across Sound, Music, and Media

Sony AI’s research this year advanced how creators work with sound, music, and multimodal media. With the introduction of MMAudio for video-to-audio synthesis, August’s Audiokinetic announcement, and related work spanning music foundation models and protective AI, our teams explored new ways to generate, edit, and align sound across creative workflows.

New capabilities in music editing, mastering, effects modeling, and identification

In 2025, Sony AI’s work in music and sound centered on building creator-facing systems that are more resilient in real-world conditions. In research presented across several conferences, our teams explored how multimodal learning can support the full lifecycle of audio creation.

At ISMIR 2025, this direction was reflected in research spanning text-guided music editing, inference-time mastering optimization, instrument-wise effects understanding, and more robust music identification under noisy conditions. Rather than treating these as isolated capabilities, the work collectively examined how AI systems can reduce technical friction while preserving creative intent. This work enables creators and rights holders to shape, refine, protect, and recognize music with greater precision and control.

In parallel, MMAudio extended this multimodal focus beyond music, demonstrating how joint training across text, audio, and video can improve temporal alignment and semantic coherence in video-to-audio generation. Together, these efforts reflect a broader shift toward foundation-level models and workflows that support sound, music, and media creation across modalities, all while remaining grounded in real production and usage contexts.

Protecting creators and musical integrity in the age of AI

Sony AI also advanced a focused body of research in 2025 aimed at attribution, recognition, and protection, helping creators and rights holders better understand how music is generated, related, and preserved in AI-driven workflows.

This work spanned three complementary research directions:

Attribution through unlearning

Researchers explored how unlearning techniques can be used to trace which training examples most influenced a generated piece of music. This approach offers a pathway for making influence visible in large-scale generative music models, supporting accountability and attribution without relying on explicit metadata.

Read the research here: Large-Scale Training Data Attribution for Music Generative Models via Unlearning
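To make the unlearning idea concrete, here is a minimal toy sketch, not the method from the paper. It assumes a hypothetical two-feature logistic-regression model standing in for a generative music model, labels the "generated" sample with the model's own prediction, approximates unlearning with a single gradient-ascent step on that sample's loss, and then ranks training examples by how much their loss rises. The weights, data, and function names are illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def log_loss(w, x, y):
    """Binary cross-entropy of a logistic model w on example (x, y)."""
    p = sigmoid(dot(w, x))
    return -math.log(p) if y == 1 else -math.log(1.0 - p)


def grad_log_loss(w, x, y):
    """Gradient of log_loss with respect to w: (p - y) * x."""
    p = sigmoid(dot(w, x))
    return [(p - y) * xi for xi in x]


def attribute_by_unlearning(w, train, generated_x, lr=0.5, steps=1):
    """Rank training examples by how much their loss rises after unlearning.

    Hypothetical toy: "unlearning" is gradient ASCENT on the generated
    sample's loss, using the model's own prediction as that sample's label.
    """
    generated_y = 1 if sigmoid(dot(w, generated_x)) >= 0.5 else 0
    w_unlearned = list(w)
    for _ in range(steps):
        g = grad_log_loss(w_unlearned, generated_x, generated_y)
        w_unlearned = [wi + lr * gi for wi, gi in zip(w_unlearned, g)]
    # Influence score: loss after unlearning minus loss before.
    scores = {
        name: log_loss(w_unlearned, x, y) - log_loss(w, x, y)
        for name, (x, y) in train.items()
    }
    ranking = sorted(scores, key=scores.get, reverse=True)
    return ranking, scores


# Illustrative "pretrained" weights and training set (made-up values).
weights = [1.0, 0.0]
training_set = {
    "A": ([2.0, 0.5], 1),  # strongly aligned with the generated sample
    "B": ([0.5, 2.0], 1),  # weakly aligned
    "D": ([0.0, 2.0], 0),  # orthogonal to the generated sample
}
ranking, scores = attribute_by_unlearning(weights, training_set, [2.0, 0.0])
print(ranking)  # ['A', 'B', 'D']
```

Examples that push the model in the same direction as the generated sample lose the most when it is unlearned, which is the intuition behind using unlearning as an attribution signal. Large generative models would need far more careful, and more expensive, approximations than this single step.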

Musical version recognition and similarity mapping

New contrastive learning methods examined how relationships between musical works can be identified at the segment level, rather than by comparing entire tracks. This enables more nuanced detection of musical versions, shared motifs, and creative lineage — even when works differ in arrangement or production.

Read the research here: Supervised contrastive learning from weakly-labeled audio segments for musical version matching
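The two ideas in this paragraph can be sketched separately: a supervised contrastive (SupCon-style) loss that pulls together segments sharing a version label, and a matching step that compares tracks segment by segment. This is an illustrative sketch, not the paper's model. It assumes that each segment inherits its track's version label as a weak label and that segment embeddings come from some trained encoder; the hand-made 4-d vectors and all function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def supcon_loss(embeddings, labels, temperature=0.1):
    """Supervised contrastive loss over segment embeddings.

    Each segment carries its track's version label (a weak label): segments
    sharing a label are positives, all other segments act as negatives.
    """
    n = len(embeddings)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue
        logits = {j: cosine(embeddings[i], embeddings[j]) / temperature
                  for j in range(n) if j != i}
        log_denom = math.log(sum(math.exp(v) for v in logits.values()))
        # Average negative log-probability of each positive for this anchor.
        total += -sum(logits[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


def segment_match(query_segments, reference_segments):
    """Best cosine similarity between any query segment and any reference segment."""
    return max(cosine(q, r) for q in query_segments for r in reference_segments)


def track_match(query_segments, reference_segments):
    """Baseline: compare one averaged embedding per track."""
    def mean(segs):
        return [sum(col) / len(segs) for col in zip(*segs)]
    return cosine(mean(query_segments), mean(reference_segments))


# Hypothetical segment embeddings, e.g., from an encoder trained with supcon_loss.
reference = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]  # e.g., verse, chorus
cover = [[0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]      # reuses only the chorus
print(segment_match(cover, reference))  # 1.0: the shared chorus is found
print(track_match(cover, reference))    # about 0.5: averaging dilutes it
```

Averaging a whole track into one vector dilutes a shared chorus with unrelated material, while the segment-level score still finds it, which is the motivation for matching at the segment level.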

Evaluating the limits of audio watermarking

Researchers introduced benchmarks to test whether existing audio watermarking methods can survive real-world transformations such as compression, reverberation, and neural codecs. These evaluations highlighted where current approaches remain fragile, informing future directions for more resilient protection mechanisms.

Read the research here: A Comprehensive Real-World Assessment of Audio Watermarking Algorithms: Will They Survive Neural Codecs?
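The shape of such a robustness benchmark can be sketched with a toy spread-spectrum watermark: embed bits, apply transformations, decode, and report bit accuracy per transformation. This is a hypothetical illustration, not one of the algorithms or attacks evaluated in the paper. Gaussian noise stands in for audio, and the transformations are simple stand-ins (gain, additive noise, a one-sample delay) rather than real compression, reverberation, or neural codecs.

```python
import random


def make_pn(length, seed):
    """Pseudo-random +/-1 chip sequence shared by embedder and detector (the key)."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(length)]


def embed(host, bits, pn, chips_per_bit, alpha):
    """Spread-spectrum embedding: add +/-alpha * pn over each bit's chip block."""
    out = list(host)
    for b, bit in enumerate(bits):
        sign = 1.0 if bit else -1.0
        for i in range(b * chips_per_bit, (b + 1) * chips_per_bit):
            out[i] += alpha * sign * pn[i]
    return out


def detect(signal, n_bits, pn, chips_per_bit):
    """Correlate each chip block with the key; the sign of the sum gives the bit."""
    bits = []
    for b in range(n_bits):
        block = range(b * chips_per_bit, (b + 1) * chips_per_bit)
        bits.append(sum(signal[i] * pn[i] for i in block) > 0)
    return bits


def bit_accuracy(decoded, expected):
    return sum(d == e for d, e in zip(decoded, expected)) / len(expected)


def run_benchmark(n_bits=16, chips_per_bit=1000, alpha=0.3, seed=0):
    rng = random.Random(seed)
    length = n_bits * chips_per_bit
    host = [rng.gauss(0.0, 1.0) for _ in range(length)]  # stand-in for audio
    bits = [rng.random() < 0.5 for _ in range(n_bits)]
    pn = make_pn(length, seed + 1)
    marked = embed(host, bits, pn, chips_per_bit, alpha)

    noise_rng = random.Random(seed + 2)
    transforms = {
        "identity": lambda s: s,
        "gain_0.5": lambda s: [0.5 * x for x in s],
        "additive_noise": lambda s: [x + noise_rng.gauss(0.0, 0.5) for x in s],
        # A one-sample delay breaks chip alignment (a crude desynchronization).
        "shift_1_sample": lambda s: [0.0] + s[:-1],
    }
    return {
        name: bit_accuracy(detect(t(marked), n_bits, pn, chips_per_bit), bits)
        for name, t in transforms.items()
    }


for name, acc in run_benchmark().items():
    print(f"{name:>15}: bit accuracy {acc:.2f}")
```

In this toy, gain changes and moderate noise leave every bit intact, while a one-sample delay typically drops accuracy to roughly chance, because the correlation detector depends on exact sample alignment. It is plausible that transformations which resynthesize the waveform, as neural codecs do, stress alignment-sensitive detectors in a similar way; measuring that is what a real-world benchmark is for.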

Together, this research examined how AI systems might better support attribution, recognition, and integrity in music—not by offering a single solution, but by identifying where technical progress is needed as generative tools become more widespread.

Supporting creators through new industry partnerships

In August, Sony AI and Audiokinetic announced Similar Sound Search, the first AI-powered audio-to-audio and text-to-audio search tool built into Wwise. The collaboration lets creators search for sounds by example, not just by metadata, improving discovery, creative iteration, and production workflows.

Learn more:

Why Sony AI & Audiokinetic Chose PSE to Train Intelligent Audio Tools

Behind the Sound: How AI Is Enhancing Audio Search

Advancing Perception Through Imaging & Sensing

Imaging and sensing research in 2025 also expanded beyond traditional visual pipelines to address how perception systems are designed, deployed, and protected in real-world settings. This included work at the intersection of vision models, hardware constraints, and privacy-preserving machine learning—areas that increasingly shape how sensing systems operate at scale, from edge devices to embedded platforms.

Rethinking visual pipelines

One highlight was the Raw Adaptation Module (RAM), a parallel processing design for RAW sensor data. Unlike traditional RGB pipelines optimized for human perception, RAM fuses multiple attributes directly from the sensor, achieving state-of-the-art performance in low-light and adverse conditions.

At CVPR 2025, the Privacy-Preserving Machine Learning (PPML) team contributed research on compact, adaptable vision models designed for efficient deployment under resource and privacy constraints. This included Argus, a scalable vision foundation model that rethinks how perception systems can generalize across tasks while remaining lightweight and adaptable, qualities that are increasingly relevant for AI-assisted chip design, edge sensing, and privacy-aware visual systems. Together, this work reflects a broader view of imaging and sensing: not only improving what systems see, but also how perception is optimized, deployed, and safeguarded in practice.

Sights on AI: Our interview series focusing on the minds behind Sony AI

In our Sights on AI interview, Daisuke Iso, Staff Research Scientist at Sony AI and a senior leader within the Imaging & Sensing Flagship Project, reflected on how AI-optimized pipelines can expand perception itself. His team’s work spans low-light enhancement, RAW-to-RGB augmentation methods, and line-wise AI execution at the sensor level, all approaches that aim to reshape how cameras acquire and interpret information.

In our next Sights on AI interview, we met with Lingjuan Lyu, Head of the Privacy-Preserving Machine Learning (PPML) team in the Imaging & Sensing Flagship Project at Sony AI. A globally recognized expert in privacy and security, she leads a team of scientists and engineers dedicated to advancing research in AI privacy and security. In the interview, Lingjuan reflects on her journey into AI, the evolving challenges in privacy and security, and her team’s groundbreaking work at Sony AI.

Engineering Breakthroughs: AI for Chip Design

At MLCAD 2025, Sony AI researchers introduced two complementary tools aimed at long-standing challenges in analog circuit design.

- GENIE-ASI, a training-free approach that uses LLM reasoning to generate executable Python code for identifying analog subcircuits.

- Schemato, a fine-tuned LLM that converts SPICE netlists into human-readable LTspice schematics with high connectivity accuracy.

Both tools improve interpretability and reduce iteration time. These works demonstrate how AI can collaborate with engineers by codifying reasoning and producing assets ready for expert review.
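Neither tool's code appears in this post, so the following is a hand-written, hypothetical sketch of the kind of rule-based subcircuit identification a generated Python program might perform, together with the net-to-pin map that any netlist-to-schematic conversion has to preserve. It assumes a tiny SPICE subset (MOSFETs plus R, C, and L elements), treats nets named 0, gnd, vdd, and vss as supplies, and uses a simple differential-pair heuristic; none of this reflects the actual implementation of GENIE-ASI or Schemato.

```python
# Assumed supply-net names and pin orders for the supported SPICE subset.
SUPPLY_NETS = {"0", "gnd", "vdd", "vss"}
PIN_NAMES = {"M": ("d", "g", "s", "b"), "R": ("p", "n"), "C": ("p", "n"), "L": ("p", "n")}


def parse_netlist(text):
    """Parse a tiny SPICE subset: MOSFETs (M) and two-terminal R/C/L elements."""
    devices = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "*.":  # skip comments and dot-commands
            continue
        tokens = line.split()
        name, kind = tokens[0], tokens[0][0].upper()
        if kind not in PIN_NAMES:
            continue  # other element types are out of scope for this sketch
        n_pins = len(PIN_NAMES[kind])
        nets = [t.lower() for t in tokens[1:1 + n_pins]]  # SPICE is case-insensitive
        # "model" holds the MOSFET model name, or the value for R/C/L.
        devices[name] = {"kind": kind, "nets": nets, "model": tokens[1 + n_pins]}
    return devices


def net_connectivity(devices):
    """Map each net to the sorted device pins attached to it."""
    nets = {}
    for name, dev in devices.items():
        for pin, net in zip(PIN_NAMES[dev["kind"]], dev["nets"]):
            nets.setdefault(net, []).append(f"{name}.{pin}")
    return {net: sorted(pins) for net, pins in nets.items()}


def find_differential_pairs(devices):
    """Heuristic: same-model MOSFETs sharing a non-supply source,
    with distinct gates and distinct drains."""
    mos = [(n, d) for n, d in devices.items() if d["kind"] == "M"]
    pairs = []
    for i, (n1, d1) in enumerate(mos):
        for n2, d2 in mos[i + 1:]:
            drain1, gate1, source1, _ = d1["nets"]
            drain2, gate2, source2, _ = d2["nets"]
            if (d1["model"] == d2["model"] and source1 == source2
                    and source1 not in SUPPLY_NETS
                    and gate1 != gate2 and drain1 != drain2):
                pairs.append((n1, n2, source1))
    return pairs


OTA = """
* Five-transistor OTA (illustrative)
M1 out1 inp tail 0 nmos
M2 out inn tail 0 nmos
M3 out1 out1 vdd vdd pmos
M4 out out1 vdd vdd pmos
M5 tail bias 0 0 nmos
.end
"""

devices = parse_netlist(OTA)
print(find_differential_pairs(devices))   # [('M1', 'M2', 'tail')]
print(net_connectivity(devices)["tail"])  # ['M1.s', 'M2.s', 'M5.d']
```

The current-mirror pair M3/M4 is correctly skipped because its shared source is a supply net. Comparing the net-to-pin map of a generated schematic against the source netlist is one plausible way to check connectivity; the metric Schemato reports may be defined differently.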

Reinforcement Learning and Adaptive Agents

Reinforcement learning (RL) remained another crucial research pillar. A feature published at the end of summer captured why RL continues to matter: unlike static-learning methods, RL agents learn through interaction, failure, and adaptation, capabilities that systems operating in dynamic environments need.

This year included progress across strategic RL algorithms, constraint-aware learning, and real-world applications such as GT Sophy. We highlighted the release of the Gran Turismo 7 Power Pack DLC, featuring Gran Turismo Sophy 3.0, which delivers more realistic, competitive on-track behavior across racing scenarios. The release is a reminder that reinforcement learning research continues to ship into large-scale interactive environments.

Learn more: Spec III update and Power Pack update.

In parallel, GT Sophy 2.1 expanded the scope of Sony AI’s racing agent, shifting emphasis from pure dominance toward realism, sportsmanship, and player experience. This evolution signaled a broader reframing of superhuman agents as collaborative systems rather than adversarial benchmarks.

Watch the YouTube trailer or read the press release to see how GT Sophy 2.1 is changing the landscape of AI agents.

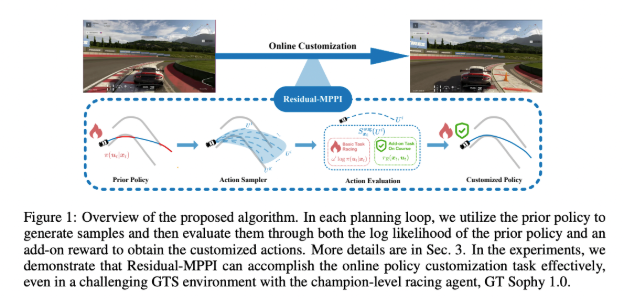

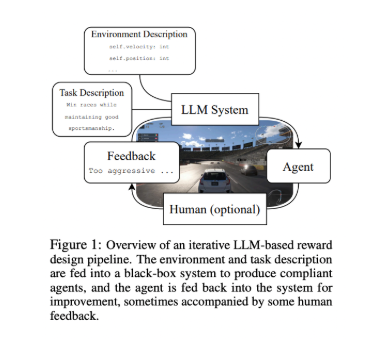

In 2025, Sony AI research also marked important milestones in advancing GT Sophy’s underlying learning framework. One line of work demonstrated how large language and vision-language models can automate reward design for autonomous racing, translating high-level, text-based goals into effective reward functions without manual tuning. This approach produced agents competitive with (and in some cases surpassing!) existing GT Sophy baselines, while significantly reducing the need for expert-crafted rewards.

Read the research here: Automated Reward Design for Gran Turismo

Complementing this, additional research advanced vision-based reinforcement learning for competitive racing. This work strengthens GT Sophy’s ability to reason about opponents, adhere to racing rules, and maintain robust performance under realistic multi-agent conditions. Together, these efforts reflect a shift toward more scalable, adaptable RL systems, extending GT Sophy from a landmark achievement into a continuously evolving research platform.

Read the research here: Residual-MPPI: Online Policy Customization For Continuous Control

A Year of Global Presence Across Leading Research Venues

Our teams contributed to venues including AAAI, ICLR, CVPR, ICML, ACL, IJCNN, ICCV, ISMIR, MLCAD, SIGGRAPH, Interspeech, Indaba, CSCW, SXSW, and NeurIPS 2025.

Across these venues, our teams explored:

- Multi-modal generation and evaluation

- Efficient diffusion models and distillation

- Training data attribution in generative modeling

- RAW-domain perception

- Federated and privacy-preserving learning

- Robust audio identification

- RL and control under real-world constraints

These contributions highlight the breadth of our research while reinforcing a shared focus: making AI systems more adaptive and more aligned with human needs.

At SXSW in March, Sony AI leaders discussed key topics in AI:

- President Michael Spranger shared his view that AI has reached a pivotal moment, exploring how AI research and technology can foster new creative possibilities without infringing on what has come before.

- Chief Scientist Peter Stone shared his perspective on how advanced robotics and AI can support creators, highlighting the importance of responsible development and genuine collaboration to ensure these technologies enhance human creativity rather than replace it.

Looking Ahead

As we prepare for 2026, several trajectories stand out:

- Increasing integration of AI directly into sensing, imaging, and scientific workflows

- Deeper collaboration across engineering, research, and creative communities

- Advances in adaptive agents, creator tools, and responsible data practices

The year’s work reflects a broader commitment across Sony AI: to develop AI with clarity, care, and purpose, and to build systems that empower creators, scientists, engineers, and communities.

Thank you for following our work throughout 2025. We look forward to sharing what comes next—connect with us on LinkedIn, Instagram, or X to stay in the know.
