The Race to Turn a World-class AI into a World Champion

111 days: an inside look at the final push to perfect Gran Turismo Sophy

October 4, 2022

GT SOPHY TECHNICAL SERIES

Starting in 2020, the research and engineering team at Sony AI set out to do something that had never been done before: create an AI agent that could beat the best drivers in the world at the PlayStation® 4 game Gran Turismo™ Sport, the real driving simulator developed by Polyphony Digital. In 2021, we succeeded with Gran Turismo Sophy™ (GT Sophy). This series explores the technical accomplishments that made GT Sophy possible, pointing the way to future AI technologies capable of making continuous real-time decisions and embodying subjective concepts like sportsmanship.

To compete at the highest level of esports automobile racing, GT Sophy had to master the arts of speed, racing skills, and sportsmanship. But perhaps the most daunting challenge on the road to victory was overcoming an unexpected loss in a high-stakes race. Following this setback, GT Sophy needed to go back to the drawing board, along with our whole research and development team.

Some background on what happened: To test GT Sophy against top-tier competitors, Sony AI, game developer Polyphony Digital, and Sony Interactive Entertainment organized an event pitting four copies of GT Sophy against four of the best human Gran Turismo drivers. While GT Sophy had some great moments at that competition in July 2021, the humans won the overall points championship (86 to 70) and exposed some crucial weaknesses in GT Sophy’s behavior. But in the October 2021 “rematch” with the same tracks, cars, and human competitors, an improved version of GT Sophy took the top two positions in every race and won the team competition decisively, 104-52. In between the two races, Sony AI needed to isolate the problems and develop the solutions that would make GT Sophy a champion. We had just 111 days to get things right.

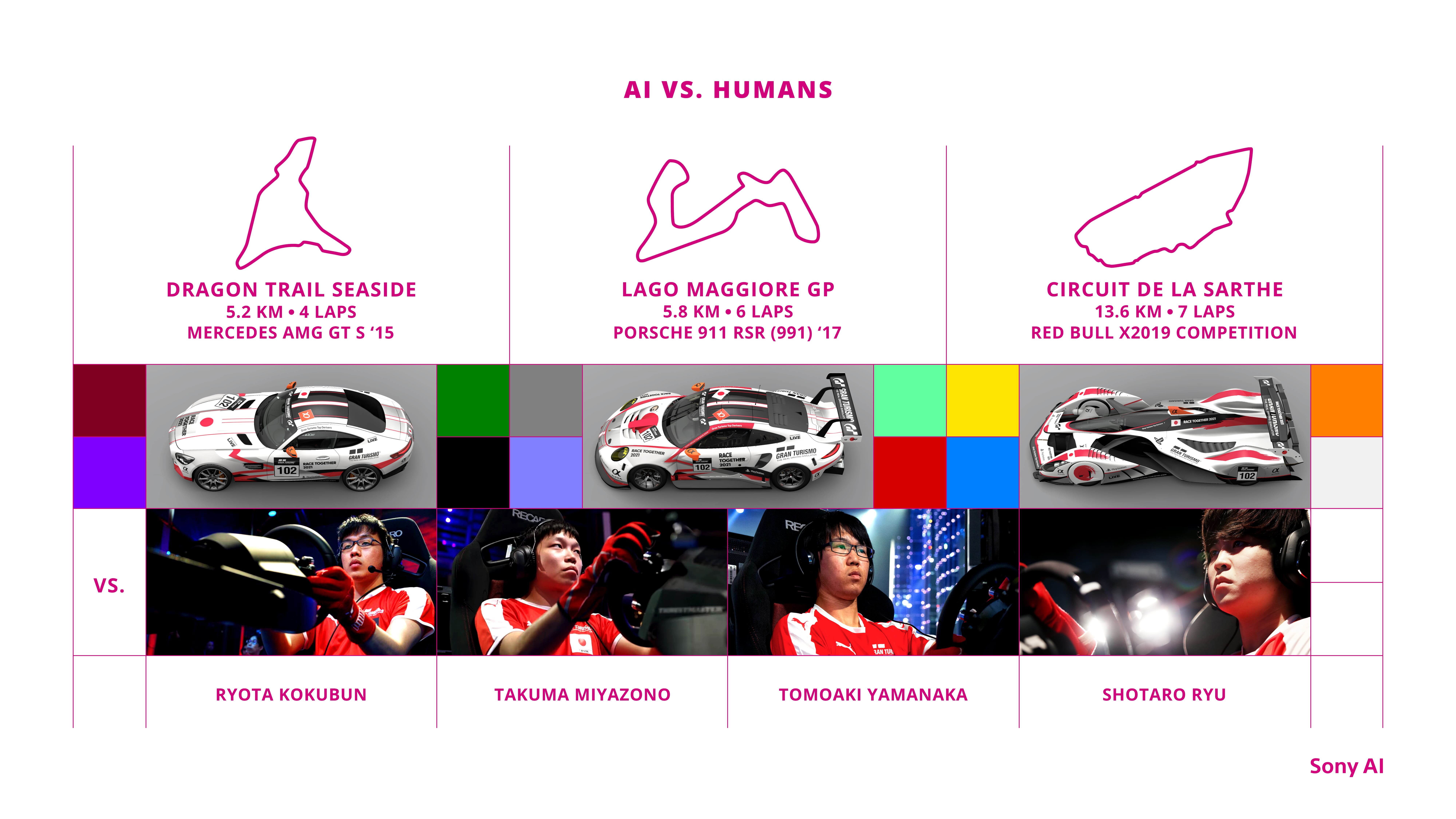

The Challenge

The competitions took place at Polyphony Digital (PDI) headquarters in Tokyo, Japan. GT Sophy competed on three different courses against some of the world’s best human Gran Turismo drivers. Each race pitted four copies of GT Sophy, each in a different-color car, against the same four top-ranked players. Points were awarded for each race based on finishing positions (10 points for first and decreasing afterward) and were aggregated by team (humans versus AI). We called the event “Race Together,” a reference to humans and machines taking part in a shared challenge. The tracks and cars were chosen to test a variety of skills and driving styles:

- A four-lap race at Dragon Trail Seaside assessed control of road cars on a course where racers have to drive to the edge of the track (with walls!), leaving little margin for error.

- A six-lap race at Autodrome Lago Maggiore GP used FIA GT3 racing cars on a course with steep slopes and hairpin turns.

- The final challenge was a seven-lap race at Circuit de la Sarthe, modeled on the course used in the 24 Hours of Le Mans endurance race. The demanding Sarthe track tested precision racing with Formula 1–style cars and speeds of over 300 km/hour, and points there were doubled.

Racing on three different tracks, AI agent GT Sophy competed against four of the best human players of PlayStation game Gran Turismo.

Race Day 1: July 2, 2021

Heading into the first Race Together event, there were plenty of unknowns. We knew GT Sophy was fast and had skills and sportsmanship, but the agent had never raced against opponents of this caliber. On race day, GT Sophy got everyone’s attention by taking first place in the first two events and holding a slim lead on points. But the race at Sarthe exposed the gap between the top humans and GT Sophy. The human team got off to a strong start, taking the first two positions with a GT Sophy car in third place, roughly a second behind the second-place car. GT Sophy did not close that gap until the last lap, even though it had qualified with a solo lap time nearly 1.5 seconds faster than the humans. On the last lap, one AI racer, Sophy Orange, briefly caught and passed Tomoaki Yamanaka for second place, before Yamanaka took the position back with authority on the next segment. In addition, another AI car, Sophy Jaune, spun out unexpectedly at the back of the pack. The humans finished the race 1-2-5-7, leading to an overall victory of 86-70.

The Postmortem

The Sony AI team went back to the (virtual) lab to figure out what went wrong and uncovered three glaring weaknesses. For each of these issues, we not only diagnosed what caused the problem but also prescribed changes to GT Sophy’s world representation, its training regimen, and the evaluation suite that we used to pick policies.

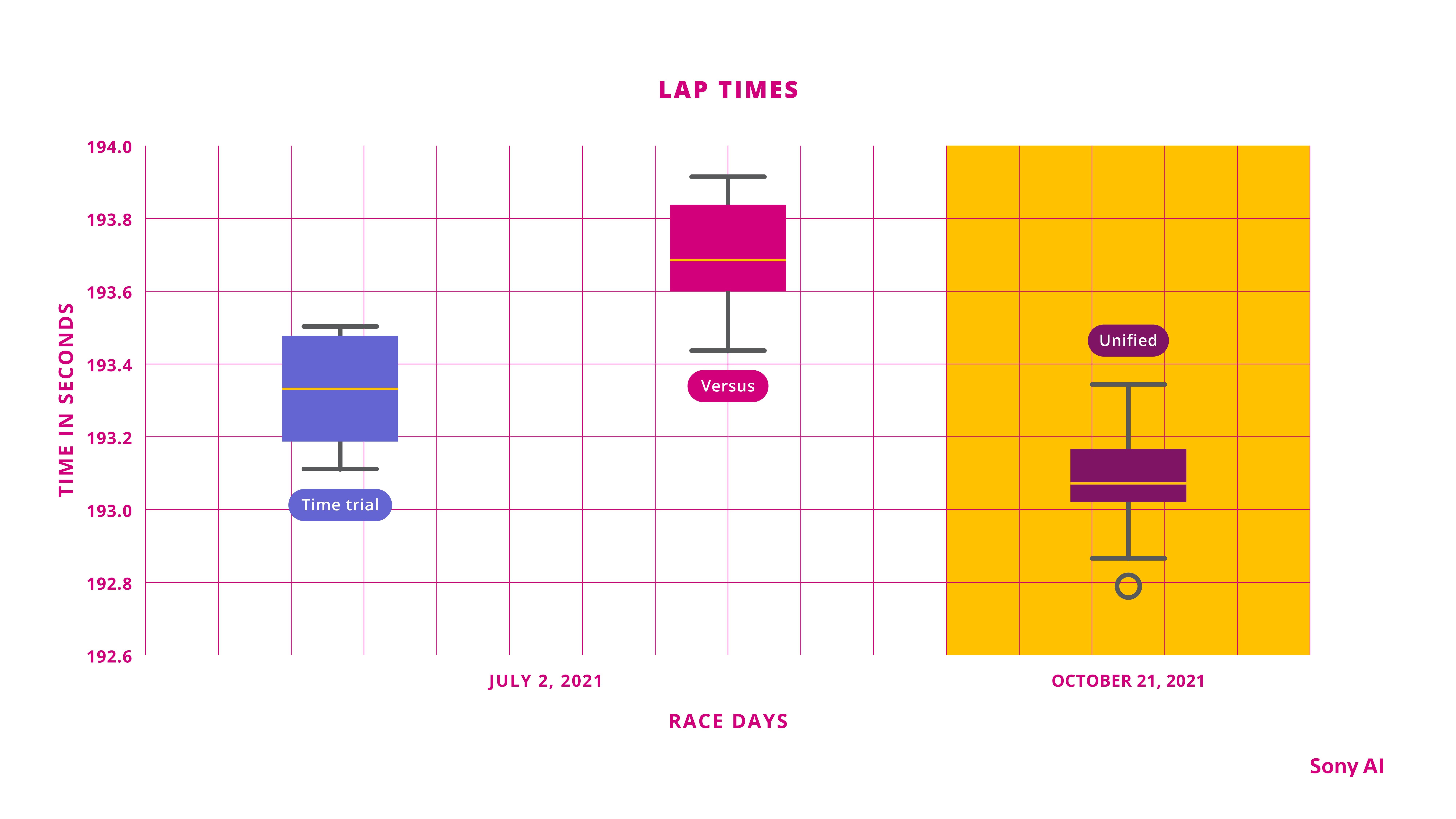

Problem 1: Why Did Sophy Spin Out?

Sophy Jaune had a particularly bad race, flying off the course and spinning in the dirt on lap 6. We found the root cause of this spin in the telemetry logs. Each July 2 agent actually had two policies that mapped its observations to actions: one for “time trial” racing when there were no other cars close by, and one for “versus” racing in traffic. The logs showed that Sophy Jaune switched mid-turn from the faster “time trial” policy to the “versus” policy, which expected to be driving a more conservative line in that section. The “versus” policy couldn’t handle the speed and position of the car, causing the spin.

What led to GT Sophy’s spinout? The agent faced difficulties as it switched mid-turn between two different policies, one faster and one more conservative.

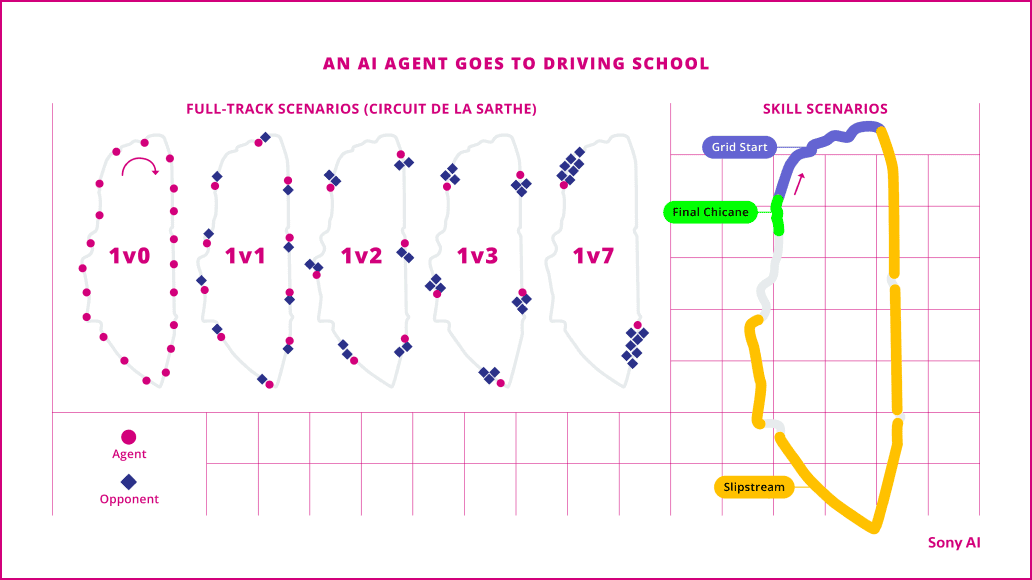

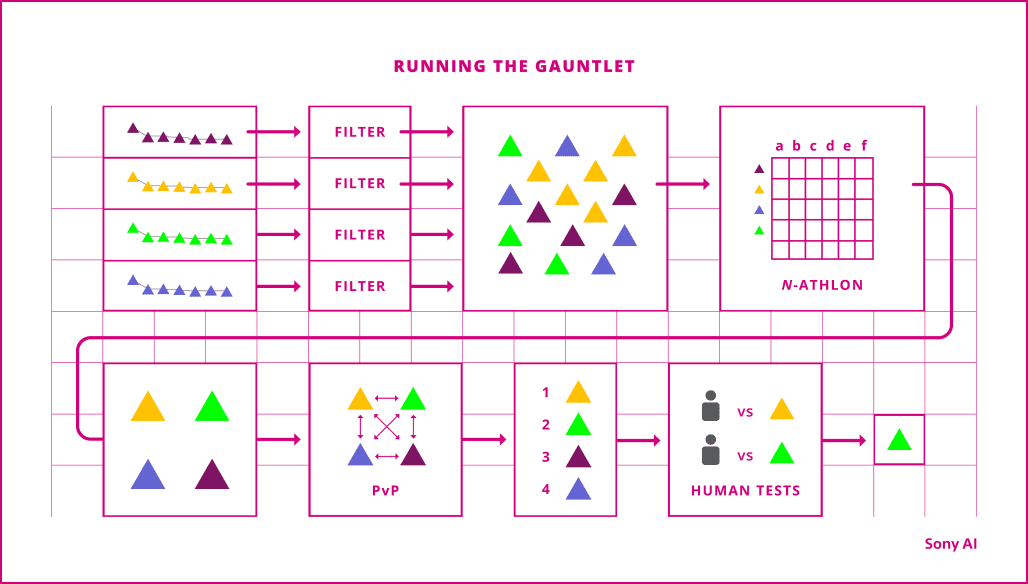

Solution 1: A Unified Policy Architecture

We unified the training architecture to produce a single policy. We originally used two different 3072 x 2 feed-forward network policies because we had not been able to make the “versus” policy fast enough to be competitive. After the event, we modified the architecture to use a single deeper 2048 x 4 network, which slowed down training but ultimately led to an agent that was as fast as the previous time trial agent and able to learn racing tactics. To ensure that this unified network could act in both solo and multi-car situations, we rebalanced the training scenarios to make sure both cases were appropriately covered.
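For a rough sense of the capacity trade-off, the sketch below counts weights and biases for the two network shapes. It reads “3072 x 2” as two hidden layers of 3,072 units and “2048 x 4” as four hidden layers of 2,048 units. That reading, along with the input and output sizes (`OBS_DIM`, `ACT_DIM`), is an assumption for illustration; the post does not state them.

```python
def mlp_param_count(input_dim, hidden_width, hidden_layers, output_dim):
    """Count weights and biases in a plain fully connected network."""
    sizes = [input_dim] + [hidden_width] * hidden_layers + [output_dim]
    # Each layer has (fan_in * fan_out) weights plus fan_out biases.
    return sum(fan_in * fan_out + fan_out
               for fan_in, fan_out in zip(sizes, sizes[1:]))

# Hypothetical observation and action sizes; not taken from the post.
OBS_DIM = 256
ACT_DIM = 2

wide_shallow = mlp_param_count(OBS_DIM, 3072, 2, ACT_DIM)
narrow_deep = mlp_param_count(OBS_DIM, 2048, 4, ACT_DIM)
print(f"3072 x 2: {wide_shallow:,} parameters")
print(f"2048 x 4: {narrow_deep:,} parameters")
```

Under these assumed sizes, the single deeper network carries somewhat more parameters than either one of the July networks. The real figures depend on the actual observation and action dimensions.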

In July 2021, GT Sophy used two different policies for racing—one designed for time trials, or driving with no other cars around, and another calibrated to race versus other competitors. For the October 2021 race, the Sony AI team scrapped that dual approach and created a single policy—which brought faster results.

Problem 2: Why Couldn’t GT Sophy Catch Up?

The solo lap times for GT Sophy (in either time trial or versus mode) were in the 3:13 to 3:14 range, which should have been fast enough to close the gap on the two lead human cars. Yet something was clearly off. GT Sophy was not running as fast as expected, even when the cars in front of it were far enough away to pose no danger.

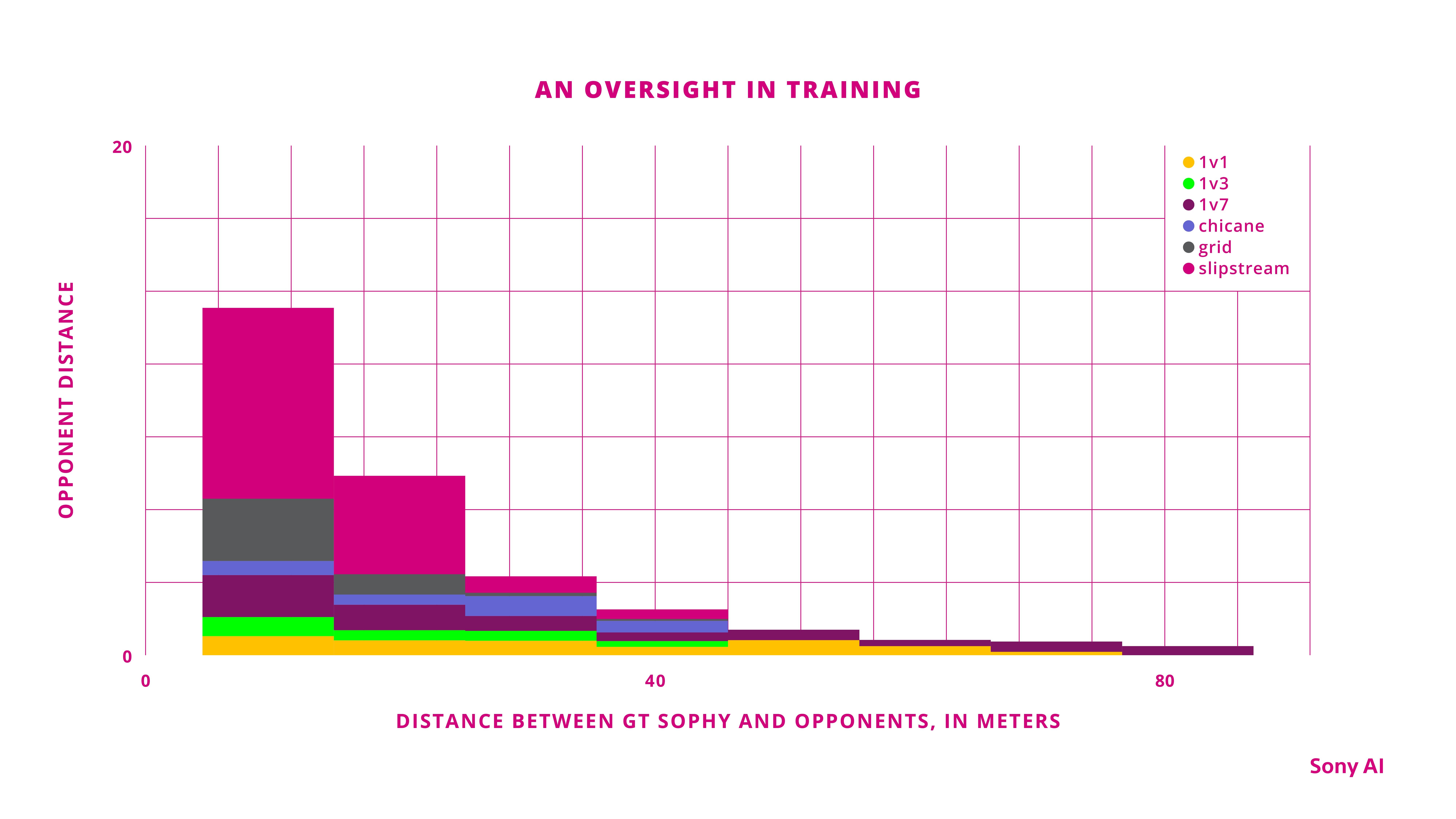

In training, GT Sophy experienced very few scenarios where competitors were 40 to 80 meters ahead. That lack of experience translated to slower-than-expected speeds in the July 2021 race.

One clue was that Sophy Jaune ran the fastest lap of the night (3:13.225) when it was all alone after the spinout, suggesting that its hesitant behavior could be tied to the presence of other cars. To check this hypothesis, we built a specific test that evaluated potential agents against an opponent placed roughly 60 meters ahead. In these tests, GT Sophy ran slower than expected, just as it had in the race. To diagnose why the agent was running so slowly with opponents barely in view, we dove into GT Sophy’s experience replay buffer, where it stored its recent training experiences. The data showed that, in training, GT Sophy experienced very few situations where opponents were 30 to 75 m ahead. Further analysis showed that GT Sophy was overgeneralizing in these situations: it relied too heavily on a feature that indicated when there was a car within 75 m ahead, instead of using the more granular information about the opponent’s exact distance and speed.
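A coverage check of this kind can be sketched as a simple histogram over the distance to the nearest car ahead. The sample values, bin width, and function name below are hypothetical; the post does not describe the team’s actual tooling or data format.

```python
from collections import Counter

def distance_histogram(distances_m, bin_width_m=15, max_distance_m=90):
    """Count samples per distance bin; keys are the lower edge of each bin."""
    counts = Counter()
    for d in distances_m:
        if 0 <= d < max_distance_m:
            counts[int(d // bin_width_m) * bin_width_m] += 1
    # Include empty bins so gaps in coverage are visible.
    return {lo: counts.get(lo, 0)
            for lo in range(0, max_distance_m, bin_width_m)}

# Made-up distances (meters) to the nearest opponent ahead, one per sample.
samples = [3, 5, 8, 12, 14, 9, 2, 21, 27, 6, 11, 4, 85, 88, 79, 41, 7, 13]

hist = distance_histogram(samples)
for lo, n in hist.items():
    print(f"{lo:>2}-{lo + 15:<2} m: {'#' * n} ({n})")
```

With this invented data, the bins between 30 and 75 m are nearly empty, which is the kind of gap that would point to under-represented training situations.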

In the July 2021 race, GT Sophy behaved hesitantly in situations where opponents were 40 to 80 meters ahead—a scenario it didn’t experience enough in training.

Solution 2: Updating Representation, Training, and Testing

Representation: To focus GT Sophy on the more granular opponent features, we removed the general “car anywhere ahead/behind” variables that GT Sophy had been (erroneously) relying on. This information was essentially redundant given other features like the opponent distance, and it caused over-generalization.

Training: We introduced new scenarios to ensure GT Sophy had experience catching up to opponents at distances of 30 to 70 m. In addition, we adjusted the proportions of the training scenarios to focus more on competitive situations and less on driving alone.

Testing: We added new “slowdown” tests to the policy evaluation suite for all tracks. These tests evaluated whether policies lost speed unnecessarily when an opponent was far ahead on the track.
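A “slowdown” check along these lines might compare a policy’s solo lap time with its lap time when an opponent is placed far ahead. This is only a sketch: the function names, the 0.2-second tolerance, and the lap times are hypothetical and do not come from the team’s evaluation suite.

```python
def slowdown_seconds(solo_lap_s, trailing_lap_s):
    """Time lost per lap when an opponent is far ahead versus driving alone."""
    return trailing_lap_s - solo_lap_s

def passes_slowdown_test(solo_lap_s, trailing_lap_s, tolerance_s=0.2):
    """True if the policy loses no more than tolerance_s with a distant opponent."""
    return slowdown_seconds(solo_lap_s, trailing_lap_s) <= tolerance_s

# Hypothetical lap times in seconds for two candidate policies.
candidates = {
    "policy_a": {"solo": 193.4, "trailing": 194.6},
    "policy_b": {"solo": 193.6, "trailing": 193.7},
}
kept = [name for name, t in candidates.items()
        if passes_slowdown_test(t["solo"], t["trailing"])]
print(kept)
```

Here `policy_a` would be discarded because it gives up more than a second per lap with a distant opponent in view, while `policy_b` stays within the tolerance.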

Problem 3: Why Did Sophy Let an Opponent By?

On the final lap of the July 2 race, Sophy Orange finally tracked down Yamanaka and made a textbook slingshot pass by riding in his slipstream until just the right moment, then speeding ahead. But Yamanaka got back in GT Sophy’s slipstream and made a strong pass on the final straightaway. While the pass was a fantastic demonstration of Yamanaka’s skill under pressure, the replays and logs clearly show that GT Sophy applied the brakes, letting him pass with ease and race away.

We built a test that specifically emulated this scenario and again inspected GT Sophy’s training data. These investigations uncovered many of the same culprits as the “catch up” issue, but they also uncovered a source unique to this problem: the opponent population. In training, GT Sophy raced against a population of AI opponents that couldn’t catch up when they were behind, meaning there was little training data on what to do when another player was making a pass. That left the agent ill-prepared to deal with a resilient human player like Yamanaka.

The Sony AI team worked to uncover why GT Sophy backed down and let a human racer pass it by.

Solution 3: Building Better Sparring Partners

Training opponents: The Sony AI team improved the AI opponent population by building successively better agents and ensuring they were well-behaved sparring partners. Adding such opponents led to interactions more like the ones GT Sophy would see when racing the best humans.

Training / Testing changes: The modified training scenarios and updated opponent representation from Solution 2 also helped train GT Sophy to race better in traffic by providing more data and a clearer perspective on opponent interactions. We also added a new test to the evaluation suite that checked for timidity and braking in a situation just like Yamanaka’s pass, which helped the team discard policies that could not handle the pressure of a competitive pass attempt.

After fine-tuning GT Sophy, the Sony AI team and its partners held another series of races pitting the AI agent against top-ranked Gran Turismo players in October 2021.

Race Day 2: October 21, 2021

By October 21, GT Sophy was ready for a rematch. The Sony AI team had trained new agents using the updates mentioned above, as well as some additional hyperparameter tuning. We convened another race day using the same tracks and race cars as in the July event. The results validated all of our efforts to fine-tune GT Sophy’s performance. At both Seaside and Maggiore, GT Sophy finished 1-2-4-5 (aggregate score of 54-24) while displaying expert skills in traffic, including two GT Sophy agents running within inches of one another at Seaside without incident.

At Sarthe, where GT Sophy’s weaknesses were exposed in July, the humans took an early lead after a chaotic start, but the seven-lap race provided plenty of time for GT Sophy to catch up. This time, GT Sophy displayed all the skills it lacked in July. When it was alone, it ran the course at its physical limits without losing control. When it approached opponents, it maintained speed and made expert slipstream passes. And even when GT Sophy was passed, it maintained momentum. The most illustrative example came when Sophy Orange, which was in last place after the start, made a final pass for the lead on lap 4 in almost the same position where Yamanaka overtook GT Sophy on July 2. But this time it was GT Sophy making a decisive slipstream pass on Yamanaka, refusing to yield when the cars came nearly wheel-to-wheel, and driving away to victory.

Overall, GT Sophy took positions 1-2-5-6 (a score of 50-28) on the last course of the day, leading to a final aggregate score of 104-52. The investigations and design work by the Sony AI team had paid off. Using precise updates to the architecture, representation, training scenarios, training opponents, and evaluation suite, we built an AI that doubled the point total of some of the world’s most talented Gran Turismo racers.
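The October scores can be checked with a little arithmetic. The post only says that first place earned 10 points, with fewer for each later position; the 10-8-6-5-4-3-2-1 table below is an assumption, chosen because it reproduces the October totals quoted in this article.

```python
# Assumed points per finishing position (1st..8th); not stated in the post.
POINTS = [10, 8, 6, 5, 4, 3, 2, 1]

def race_score(ai_positions, multiplier=1):
    """Return (ai_points, human_points) for one eight-car race."""
    ai = sum(POINTS[p - 1] for p in ai_positions)
    humans = sum(POINTS) - ai
    return ai * multiplier, humans * multiplier

# October 2021 finishing positions for the four GT Sophy cars, from the text.
seaside = race_score([1, 2, 4, 5])
maggiore = race_score([1, 2, 4, 5])
sarthe = race_score([1, 2, 5, 6], multiplier=2)  # Sarthe points were doubled

ai_total = seaside[0] + maggiore[0] + sarthe[0]
human_total = seaside[1] + maggiore[1] + sarthe[1]
print(f"GT Sophy {ai_total} - Humans {human_total}")  # GT Sophy 104 - Humans 52
```

Under this assumed table, Seaside and Maggiore together come to 54-24 and Sarthe to 50-28, matching the figures above.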

In October 2021 on the Sarthe course, Sophy Orange seized the lead from human driver Tomoaki Yamanaka by making a skillful slipstream pass.

Looking Back

As GT Sophy evolved, the agent went from not knowing how to drive in a straight line to racing with the world’s best esports drivers. The 111 days between the two Race Together competitions forced our team to evolve as well. We had to develop the diagnostic tools, engineering practices, and technological enhancements to understand and improve an agent that was already pushing the limits of human behavior. In the end, the competition forced us to not just make GT Sophy better but to improve as researchers and engineers, in order to close the final gap and ensure our agent could deliver a truly superhuman performance.
